Big Data: What is Web Scraping and how to use it – Towards …

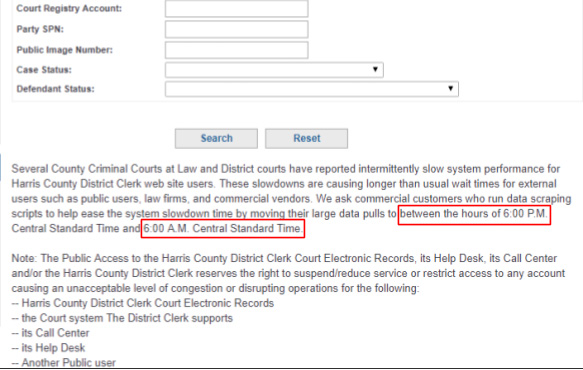

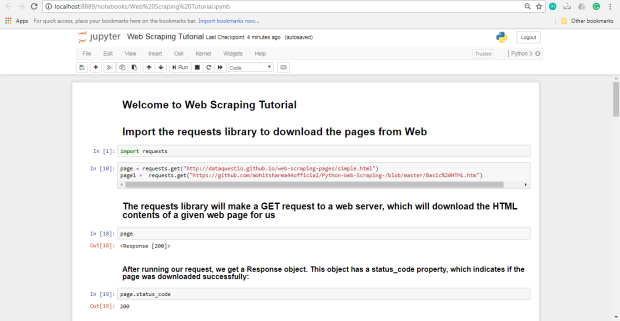

What is web scraping? It is essential for gathering Big Data sets, which are the cornerstone of Big Data analytics, Machine Learning (ML), and the training of Artificial Intelligence (AI). The hard point is that information is the most valuable commodity in the world (after time, as you cannot buy time back), as Michael Douglas’s character said in the famous “Wall Street” movie long before the Internet. This means that those who possess information take every possible precaution to protect it from copying. In pre-Internet times this was easy, as copyright legislation is fairly solid in developed countries. The World Wide Web changed everything: anybody can copy the text on a page and paste it into another page, and web scrapers are simply algorithms that can do this much faster than humans.

DISCLAIMER: The following is intended for Big Data researchers who comply with the sites’ access permissions, set the correct User Agent, and do not violate the Terms of Service of the sites they scrape.

Svit has ample experience scraping websites for our Big Data projects. We believe there are three levels of web scraping complexity, depending on the amount of JavaScript (JS) you have to tackle:

A lucky loiterer

a) The web pages you need to scrape have simple, clean markup without any JS. In this case, you simply need to create “locators” for the data in question. XPath expressions are a great example of such locators.

b) All the URLs to other websites and pages are direct. The main difficulty here is finding only the relevant URLs. For example, you can filter by the `class` attribute, in which case the XPath looks like this: `//a[@class='Your_target_class']`

A skilled professional

a) Partial JS rendering. For example, the search results page has all the information, but it is generated by JS. Typically, if you open a specific result, the full data is there without JS.

b) Simple pagination. Instead of repeatedly clicking the “next page” button, you can request pages directly by constructing the necessary URL yourself. In the same way, you can, for example, increase the number of results returned by a single query.

c) Simple URL creation rules. The links may be formed by JS, but you can work out the rule and create them yourself.

A Jedi Knight, may the Force be with you

a) The page is fully built with JS, and there is no way to get the data without running JS. In this case, you should use more sophisticated tools: Selenium or other browser-automation tools, such as WebKit-based ones, will get the job done.

b) The URLs are formed using JS. The tools from the previous item should solve this problem as well, although processing may slow down because JS rendering takes additional time. Consider splitting the scraper and the spider and performing such slow operations on a separate handler.

c) A CAPTCHA is present. Usually, the CAPTCHA does not appear immediately but only after several requests. In this case, you can use various proxy services and simply switch IP addresses when the scraper is stopped by a CAPTCHA. These services can also be useful for emulating access from different locations.

d) The website has an underlying API with complex data-transfer rules: JS scripts render the pages after querying the back end. It may be easier to get the data by making queries directly to the back end. To analyze how the scripts operate, use the Developer Console in your browser: press F12 and go to the Network tab.

It is also important to understand the difference between web scraping and data mining. In short, while data scraping can happen on any data array and can be done manually, web scraping, or crawling, takes place only on web pages and is performed by special robots: crawlers/scrapers.
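The first two levels can be tried offline with Python’s standard library. The sketch below is a minimal illustration under stated assumptions, not Svit’s actual tooling. It assumes the site’s access permissions are published as a standard robots.txt file and checks URLs against them. It also builds paginated search URLs directly (“simple pagination”) and applies the `//a[@class='Your_target_class']` locator. The base URL, User-Agent string, query parameter names (`q`, `page`, `per_page`), and sample page are all hypothetical.

```python
from urllib import robotparser
from urllib.parse import urlencode, urljoin
import xml.etree.ElementTree as ET

# Hypothetical values for illustration; they do not describe any real site.
BASE_URL = "https://example.com/search"
USER_AGENT = "MyResearchBot/1.0 (+https://example.com/bot-info)"
ROBOTS_TXT = """User-agent: *
Disallow: /private/
"""


def load_rules(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt rules from a string (no network access)."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules


def page_urls(query: str, pages: int, per_page: int = 50) -> list[str]:
    """Simple pagination: build each page URL directly instead of clicking
    "next page". The parameter names q/page/per_page are assumptions."""
    return [
        f"{BASE_URL}?{urlencode({'q': query, 'page': n, 'per_page': per_page})}"
        for n in range(1, pages + 1)
    ]


def target_links(xhtml: str, base_url: str) -> list[str]:
    """Apply the //a[@class='Your_target_class'] locator and return absolute URLs.
    ElementTree supports only a subset of XPath and needs well-formed markup,
    so the expression is written relative to the root as .//a[...]."""
    root = ET.fromstring(xhtml)
    return [
        urljoin(base_url, a.get("href", ""))
        for a in root.findall(".//a[@class='Your_target_class']")
    ]


SAMPLE_PAGE = """<html><body>
<a class="Your_target_class" href="/item/1">Item 1</a>
<a class="nav" href="/about">About</a>
<a class="Your_target_class" href="https://other.example.org/item/2">Item 2</a>
</body></html>"""

if __name__ == "__main__":
    rules = load_rules(ROBOTS_TXT)
    for url in page_urls("laptops", pages=2):
        # Only fetch URLs that the site's rules allow for our User-Agent.
        print(url, "allowed" if rules.can_fetch(USER_AGENT, url) else "disallowed")
    print(target_links(SAMPLE_PAGE, BASE_URL))
```

ElementTree is used only to keep the sketch dependency-free. It understands a subset of XPath and requires well-formed markup. On real, messy HTML a tolerant parser such as lxml.html (one of the libraries named in this article) is the usual choice, and its elements accept the original `//a[...]` expression through `.xpath()`.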
We have also listed 5 success factors for Big Data mining, where finding correct and relevant data sources is the most important prerequisite for success. For example, a manufacturer might want to monitor market trends and uncover actual customer attitudes without relying on the retailers’ monthly reports. Using web scraping, the company can collect a huge data set of product descriptions from retailer sites, along with customer reviews and feedback posted on the retailers’ websites. Analyzing this data can help the manufacturer provide retailers with better product descriptions, list the problems end users face with the product, and apply their feedback to further improve the product and secure the bottom line.

Our scrapers are typically written in Python to ease further processing of the collected data. We build them using web-crawling frameworks and libraries such as Scrapy, Ghost, lxml, aio… When building scrapers, we must be prepared to deal with any level of complexity, from a lucky loiterer to a powerful Jedi Knight. This is why we validate the data sets before building scrapers for them, so that we can allocate sufficient resources. In addition, the conditions might change (and often do) during scraper development, so a skilled data scientist must be prepared to deal with the third level and use their lightsabers at any given moment.

In the next article of this series, we will explain what measures can be taken to protect your website content from unwanted web crawling. Stay tuned for updates, and the best of luck with your web scraping!

Big Data: What Is Web Scraping, and Why Does It Matter?

Obtaining useful information efficiently and making the most of it are essential to business decision-making. However, with more than 2 billion web pages on the internet today, collecting big data manually is not feasible. Here is a simple solution: web scraping.

Table of contents

What is web scraping?

What are the advantages of web scraping?

What are the scenarios we can benefit from web scraping?

In conclusion

What is web scraping?

Web scraping is a technique for fetching large volumes of public data from websites. It automates data collection and converts the scraped data into formats of your choice, such as HTML, CSV, Excel, JSON, or TXT.

The process of web scraping primarily consists of three parts:

Parse the HTML of a web page

Extract the data needed

Store the data
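A minimal sketch of these three parts using only Python’s standard library follows. The page layout (`<h2 class="title">` elements), the URL in the comment, and the User-Agent string are hypothetical. The `fetch` helper needs network access and is not exercised in the demo, which parses an inline HTML string instead.

```python
import csv
import io
from html.parser import HTMLParser
from urllib.request import Request, urlopen


def fetch(url: str, user_agent: str = "MyResearchBot/1.0") -> str:
    """Download a page's HTML (requires network access; not used in the demo)."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


class TitleExtractor(HTMLParser):
    """Parts 1 and 2: parse the HTML and extract the text of each
    <h2 class="title"> element (a hypothetical page layout)."""

    def __init__(self) -> None:
        super().__init__()
        self._inside_title = False
        self.titles: list[str] = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs
        if tag == "h2" and ("class", "title") in attrs:
            self._inside_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._inside_title = False

    def handle_data(self, data):
        if self._inside_title:
            self.titles[-1] += data


def to_csv(titles: list[str]) -> str:
    """Part 3: store the extracted data, here as CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["title"])
    for title in titles:
        writer.writerow([title.strip()])
    return buffer.getvalue()


if __name__ == "__main__":
    html = '<h2 class="title">Widget A</h2><p>Ad</p><h2 class="title"> Widget B </h2>'
    parser = TitleExtractor()
    parser.feed(html)  # in practice: parser.feed(fetch("https://example.com/products"))
    parser.close()
    print(to_csv(parser.titles))
```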

The main way to scrape data is through programming, which is why many companies hire experienced developers to crawl websites. For those who don’t have a big budget or lack coding skills, web scraping tools come in handy. Scraping with a programming language and using web scraping tools share several advantages.

What are the advantages of web scraping?

1 Data extraction is automated

Copying and pasting data manually is a real pain. In fact, it is simply not feasible to copy and paste large amounts of data when you need to extract it from millions of web pages on a regular basis. Web scraping extracts data automatically, without manual intervention.

2 Speediness

When the work is automated, data is collected at high speed. Tasks that used to take months of manual work can now often be done within a few minutes.

3 The information collected is much more accurate

Another advantage of web scraping is that it greatly increases the accuracy of data extraction, as it eliminates human error in this process.

4 It’s a cost-effective method (sometimes even free)

A common myth about web scraping is that people need to either learn how to code themselves or hire professionals to do it, and that both require large investments of time and money. The truth is quite the opposite: coding is not a must for scraping websites, since there are dozens of web scraping tools and services available on the market. Web scraping is also an affordable solution for businesses with limited budgets. Some web scraping tools offer free plans for small-volume extraction, and the market price for large-volume data extraction is no higher than $100 a month.

5 Get clean and structured data

Gathering data is usually followed by cleaning and reorganizing it, because the collected data is rarely structured and ready to use. Web scraping tools convert unstructured and semi-structured data into structured data and reorganize web page information into presentable formats.
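To make “cleaning and reorganizing” concrete, here is a minimal sketch that turns raw scraped strings into typed records and exports them as JSON. It is not how any particular tool works internally. The field names, the sample values, and the price format (comma thousands separator, dot decimal point) are assumptions.

```python
import json
import re
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Product:
    name: str
    price: Optional[float]
    rating: Optional[float]


def parse_price(raw: str) -> Optional[float]:
    """'$1,299.99' -> 1299.99. Assumes a comma thousands separator and a dot
    decimal point; returns None when no number is present ('Out of stock')."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    return float(match.group().replace(",", "")) if match else None


def parse_rating(raw: str) -> Optional[float]:
    """'4.5 out of 5 stars' -> 4.5 (takes the first number in the text)."""
    match = re.search(r"\d+(?:\.\d+)?", raw)
    return float(match.group()) if match else None


def clean(record: dict) -> Product:
    """Turn one raw scraped record (all strings) into a typed Product."""
    return Product(
        name=" ".join(record.get("name", "").split()),  # collapse whitespace/newlines
        price=parse_price(record.get("price", "")),
        rating=parse_rating(record.get("rating", "")),
    )


raw_records = [  # hypothetical scraped values
    {"name": "  Wireless\n  Mouse ", "price": "$1,299.99", "rating": "4.5 out of 5 stars"},
    {"name": "USB Cable", "price": "Out of stock", "rating": ""},
]

if __name__ == "__main__":
    products = [clean(r) for r in raw_records]
    print(json.dumps([asdict(p) for p in products], indent=2))
```

Missing values become `None` (JSON `null`) rather than being guessed, so downstream analysis can decide how to treat them.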

What are the scenarios we can benefit from web scraping?

Web scraping is widely used across industries because of these advantages. Here are some of the common scenarios.

Competitor Monitoring

To keep tabs on competitors’ strategies, businesses need fresh data from their competitors. This helps reveal insights into pricing, advertising, social media strategy, and much more.

For example, in the e-commerce industry, online store owners collect product information such as sellers, images, and prices from websites like Amazon, Best Buy, eBay, and AliExpress. This way, they get first-hand market information and can adjust their business strategy accordingly.


Social media Sentiment Analysis

Nowadays almost everyone has at least one account on social media platforms like Facebook, Twitter, Instagram, and YouTube. These platforms not only connect us with each other but also give us a free space to express our opinions publicly. We routinely comment online about people, products, brands, and campaigns. As a result, analysts collect these comments and analyze their sentiment to better understand public opinion.

In an article titled “Scraping Twitter and Sentiment Analysis using Python”, Ashley Weldon collected more than 10k tweets about Donald Trump and used Python to analyze their underlying sentiment. The results showed that the negative words in these tweets were far more diverse than the positive ones, which the author took to indicate that people supporting him were generally less educated than people who disliked him.

Similarly, performing sentiment analysis allows businesses to know what their customers like or dislike about them, which helps them improve their product or customer service.
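As a rough illustration of how scraped comments can be turned into sentiment labels, here is a minimal lexicon-based sketch. It is not the method used in the article cited above, and the word lists are tiny hypothetical examples; real projects use curated sentiment lexicons or trained models. The `distinct_hits` helper counts how many different positive or negative words appear, which is one simple way to measure the kind of vocabulary diversity mentioned above.

```python
import re
from collections import Counter

# Tiny hypothetical lexicons for illustration only.
POSITIVE = {"love", "great", "excellent", "fast", "happy"}
NEGATIVE = {"hate", "bad", "slow", "broken", "terrible", "awful"}


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def sentiment(text: str) -> str:
    """Label a comment by counting lexicon hits: +1 per positive, -1 per negative word."""
    tokens = tokenize(text)
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def distinct_hits(comments: list[str], lexicon: set[str]) -> set[str]:
    """Distinct lexicon words used across all comments (a rough diversity measure)."""
    return {t for c in comments for t in tokenize(c) if t in lexicon}


comments = [  # hypothetical scraped comments
    "I love this phone, the battery is great",
    "Terrible support and slow delivery",
    "Arrived on Tuesday",
]

if __name__ == "__main__":
    print(Counter(sentiment(c) for c in comments))
    print(len(distinct_hits(comments, POSITIVE)), len(distinct_hits(comments, NEGATIVE)))
```

Simple word counting misses negation and sarcasm (“not great” scores as positive), which is why production pipelines usually rely on trained models.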

Product Trend Monitoring

In the business world, those who see the furthest ahead (and most accurately) are likely to win the competition. Product data empowers companies to predict the future of market trends more accurately.

In retail, for example, online fashion retailers scrape detailed product information to estimate demand accurately. A more thorough understanding of demand brings larger margins, faster-moving inventory, and smarter supply chains, which ultimately lead to higher income.

Monitoring MAP Compliance

MAP compliance monitoring is a method manufacturers use to keep an eye on retailers. In the retail and manufacturing industries, manufacturers set a minimum advertised price (MAP) for their products and need to make sure retailers do not advertise below it. Businesses also need to keep track of prices to stay competitive in a cut-throat market. With the help of web scraping, visiting all the relevant websites and collecting price data becomes much more efficient.
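Once retailer prices have been scraped, the compliance check itself is a simple comparison. The sketch below assumes the scraper has already produced (retailer, SKU, advertised price) records and that the manufacturer keeps a MAP list keyed by SKU. All names and prices are hypothetical. `Decimal` is used so that monetary differences are exact.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Listing:
    retailer: str
    sku: str
    advertised_price: Decimal


def map_violations(
    listings: list[Listing], map_prices: dict[str, Decimal]
) -> list[tuple[str, str, Decimal]]:
    """Return (retailer, sku, shortfall) for every listing advertised below the
    manufacturer's minimum advertised price for that SKU."""
    report = []
    for listing in listings:
        minimum = map_prices.get(listing.sku)
        if minimum is not None and listing.advertised_price < minimum:
            report.append((listing.retailer, listing.sku, minimum - listing.advertised_price))
    return report


map_prices = {"SKU-100": Decimal("99.00")}  # hypothetical MAP list
scraped = [  # hypothetical prices scraped from retailer sites
    Listing("Shop A", "SKU-100", Decimal("99.00")),
    Listing("Shop B", "SKU-100", Decimal("89.99")),
    Listing("Shop C", "SKU-200", Decimal("10.00")),  # no MAP set for this SKU
]

if __name__ == "__main__":
    for retailer, sku, shortfall in map_violations(scraped, map_prices):
        print(f"{retailer} advertises {sku} {shortfall} below MAP")
```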

Collect hotel & restaurant business information

Another example of web scraping usage is in the hospitality and tourism industry. Hotel consultants collect essential hotel information, such as pricing, room types, amenities, and locations, from online travel agencies (Booking, TripAdvisor, Expedia, etc.) to understand the general market price in a region. From there, they can improve the strategy for existing hotels or develop a strategy for new ones. They also scrape hotel reviews and run sentiment analysis to learn how customers feel about their accommodation experience.


The same strategy applies to the dining industry. People collect restaurant information from Yelp, such as restaurant names, categories, ratings, addresses, phone numbers, and price ranges, to get an idea of the market they are targeting.

News Monitoring

Huge amounts of news are generated worldwide every minute. Whether it is about a political scandal, a natural disaster, or a widespread disease, it is not practical for anyone to read every piece of news from every source. Web scraping makes it possible to extract news, announcements, and other relevant data from official and unofficial sources in a timely manner.

News monitoring keeps people informed about important events around the globe, and it helps governments react to emergencies quickly. For instance, during the 2019 coronavirus (SARS-CoV-2) outbreak, the numbers of confirmed cases, suspected infections, and deaths were constantly changing. Researchers could scrape the case and death statistics from the official website of China’s government in real time to study and analyze the data further. What’s more, when countless reports and rumors were circulating at the same time, the government was able to quickly separate rumors from facts and clarify them, which reduced the risk of unnecessary panic and even social chaos.
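A simple form of news monitoring is to poll sources that publish RSS feeds and keep only items matching a set of keywords. The sketch below assumes the source offers an RSS 2.0 feed and parses an inline sample rather than downloading one. The feed contents, URLs, and keywords are hypothetical.

```python
import xml.etree.ElementTree as ET
from email.utils import parsedate_to_datetime

# Hypothetical RSS 2.0 feed; real feeds would be downloaded on a schedule.
SAMPLE_RSS = """<rss version="2.0"><channel><title>Example News</title>
<item><title>Health ministry reports new coronavirus cases</title>
<link>https://news.example.com/1</link><pubDate>Mon, 03 Feb 2020 08:00:00 GMT</pubDate></item>
<item><title>Local football team wins the cup</title>
<link>https://news.example.com/2</link><pubDate>Mon, 03 Feb 2020 09:00:00 GMT</pubDate></item>
</channel></rss>"""


def matching_items(rss_xml: str, keywords: list[str]):
    """Return (title, link, published) for feed items whose title mentions a keyword."""
    root = ET.fromstring(rss_xml)
    wanted = [k.lower() for k in keywords]
    hits = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        if any(k in title.lower() for k in wanted):
            published = item.findtext("pubDate")
            # RSS dates use the RFC 822 format, which email.utils can parse.
            hits.append((title, item.findtext("link"),
                         parsedate_to_datetime(published) if published else None))
    return hits


if __name__ == "__main__":
    for title, link, published in matching_items(SAMPLE_RSS, ["coronavirus", "outbreak"]):
        print(published.isoformat() if published else "?", title, link)
```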

Conclusions

In this article, I’ve covered some basics of web scraping and how it is used in different industries. Note that scraping websites doesn’t necessarily require programming skills; you can always seek help from web scraping tools and service providers like Octoparse. They not only provide ready-to-use web scraping templates and help you build your scraper, but also offer customized data extraction services. If you have any questions regarding Octoparse, you can email

Curious to find out how web scraping can help in growing your business? Check out 30 Ways to Grow Your Business with Web Scraping.

Author: Milly


Frequently Asked Questions about big data web scraping

Is web scraping Big data?

Web scraping is a way to gather big data. For example, by using web scraping, a company can collect a huge data set of product descriptions, customer reviews, and feedback from retailers’ websites. (Feb 9, 2018)

What is scraping in big data?

Web scraping is a technique for fetching large volumes of public data from websites. It automates data collection and converts the scraped data into formats of your choice, such as HTML, CSV, Excel, JSON, or TXT. (Jan 25, 2021)

Is web scraping a crime?

Web scraping itself is not illegal. In fact, web scraping, or web crawling, has historically been associated with well-known search engines like Google and Bing, which crawl sites to index the web. … A clear example of when web scraping can be illegal is when you try to scrape nonpublic data. (Nov 17, 2017)