Data Crawling vs Data Scraping – The Key Differences

One of our favourite quotes is, ‘If a problem changes by an order, it becomes a different problem’, and in this lies the answer to the question of data crawling vs data scraping.

Data crawling means dealing with large data sets, where you develop your own crawlers (or bots) that crawl to the deepest of the web pages. Data scraping, on the other hand, refers to retrieving information from any source (not necessarily the web). More often than not, irrespective of the approach involved, we refer to extracting data from the web as scraping (or harvesting), and that’s a serious misconception.

Data Crawling vs Data Scraping – Key Differences

1. Scraping data does not necessarily involve the web. Data scraping tools could just as well extract information from a local machine or a database. Even on the internet, a mere “Save as” link on a page is a subset of the data scraping universe. Data crawling, on the other hand, differs immensely in scale as well as in range. Firstly, crawling means web crawling: the web is the only place where we “crawl” data. Programs that perform this incredible job are called crawl agents, bots or spiders (please leave the other spider in Spiderman’s world). Some web spiders are algorithmically designed to reach the maximum depth of a site and crawl its pages iteratively. In everyday usage, though, the terms web scraping and web crawling are often treated as the same thing.

2. The web is an open world and the quintessential practising platform of our right to freedom. Thus a lot of content gets created and then duplicated. For instance, the same blog post might appear on different pages, and our spiders don’t understand that. Hence, data de-duplication (affectionately, dedup) is an integral part of a web data crawling service. This achieves two things: it keeps our clients happy by not flooding their machines with the same data more than once, and it saves our servers some space. However, deduplication is not necessarily a part of web data scraping.
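As a rough illustration of the dedup step, here is a minimal sketch that fingerprints page text with a hash and skips anything already seen. The function names, the sample URLs, and the choice of SHA-256 over whitespace-normalized text are assumptions made for this example, not a description of any particular crawling service.

```python
import hashlib


def fingerprint(text: str) -> str:
    """Hash the text after lowercasing it and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(pages):
    """Yield (url, text) pairs, skipping pages whose content was already seen."""
    seen = set()
    for url, text in pages:
        digest = fingerprint(text)
        if digest in seen:
            continue  # same content already delivered from another URL
        seen.add(digest)
        yield url, text


# Hypothetical crawl output: the second page mirrors the first.
pages = [
    ("https://example.com/blog/post", "Crawling vs  scraping"),
    ("https://example.org/mirror/post", "crawling vs scraping"),
    ("https://example.com/blog/other", "A different article"),
]
unique = list(deduplicate(pages))
```

An exact hash only catches copies that are identical after normalization; near-duplicates with small edits would need a fuzzier comparison.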

3. One of the most challenging things in the web crawling space is coordinating successive crawls. Our spiders have to be polite to the servers they hit so that they do not annoy them. This creates an interesting situation to handle. Over time, our spiders have to get more intelligent (and not crazy!). They learn when and how much to hit a server, and how to crawl the data feeds on its web pages while complying with its politeness policies.

4. Finally, different crawl agents are used to crawl different websites, so you need to ensure they don’t conflict with each other in the process. This situation never arises when you intend to just scrape data.
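One common way to encode a server's politeness policy is a robots.txt file. The sketch below uses Python's standard `urllib.robotparser` to check whether a URL may be fetched and how long to wait between hits. The robots.txt content, the `example-bot` agent name, and the helper functions are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would download it from the site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a rule set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def seconds_to_wait(parser, agent, last_hit, now, default_delay=1.0):
    """Return how long to pause before hitting the same server again."""
    delay = parser.crawl_delay(agent) or default_delay
    return max(0.0, last_hit + delay - now)


rules = load_rules(ROBOTS_TXT)
allowed = rules.can_fetch("example-bot", "https://example.com/articles/1")
blocked = rules.can_fetch("example-bot", "https://example.com/private/data")
wait = seconds_to_wait(rules, "example-bot", last_hit=10.0, now=10.5)
```

A crawler would call `seconds_to_wait` per host and sleep for that long before the next request, which keeps the hit rate within what the server asks for.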

| Data Scraping | Data Crawling |
| --- | --- |
| Involves extracting data from various sources including web | Refers to downloading pages from the web |
| Can be done at any scale | Mostly done at a large scale |
| Deduplication is not necessarily a part | Deduplication is an essential part |
| Needs crawl agent and parser | Needs only crawl agent |

On a concluding note, when talking about web scraping vs web crawling, ‘scraping’ represents a very superficial node of crawling which we call extraction, and that again requires a few algorithms and some automation in place.

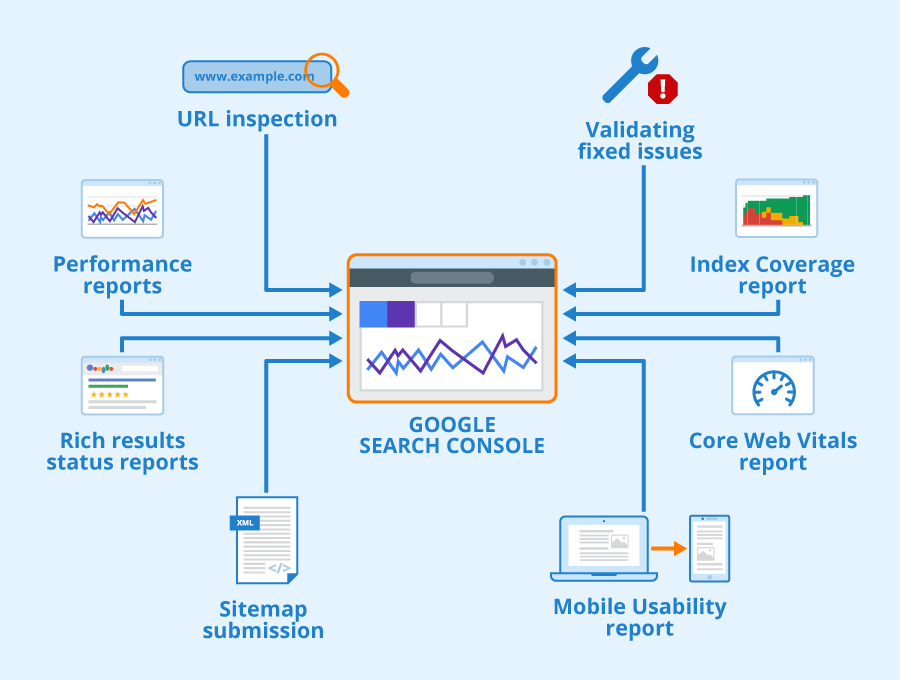

Is web crawling legal? – Towards Data Science

Web crawling, also known as web scraping, data scraping or spidering, is a technique in which a computer program scrapes a huge amount of data from websites, extracting regularly formatted data and processing it into easy-to-read structured formats.

Web crawling is basically how the internet functions. For example, SEO requires creating sitemaps and giving Google permission to crawl a site in order to rank higher in the search results. Many consulting companies hire companies that specialize in web scraping to enrich their databases so as to provide professional service to their clients.

It is really hard to determine the legality of web scraping in the digital era. Web crawling can be used for malicious purposes, for example:

- Scraping private or classified information.
- Disregarding the website’s terms of service, or scraping without the owner’s permission.
- Requesting data in an abusive manner, which can crash a web server under the additional heavy load.

It is important to note that a responsible data service provider would refuse your request if:

- The data is private and requires a username and passcode
- The TOS (Terms of Service) explicitly prohibits web scraping
- The data is copyrighted

Scraping can also expose you to legal claims such as:

- Violation of the Computer Fraud and Abuse Act (CFAA)
- Violation of the Digital Millennium Copyright Act (DMCA)
- Trespass to chattels

Having “just scraped a website” may cause unexpected consequences depending on how you use the data.

You have probably heard of the HiQ vs LinkedIn case in 2017. HiQ is a data science company that provides scraped data to corporate HR departments. LinkedIn sent a cease-and-desist letter to stop HiQ’s scraping. HiQ then filed a lawsuit to stop LinkedIn from blocking its access. The court ruled in favor of HiQ, because HiQ scraped data from public LinkedIn profiles without logging in. That said, it is perfectly legal to scrape data which is publicly shared on the internet.

Let’s take another example to illustrate in what case web scraping can be harmful: the law case eBay v. Bidder’s Edge. If you’re doing web crawling for your own purposes, it is legal as it falls under fair use doctrine. The complications start if you want to use scraped data for others, especially for commercial purposes. eBay v. Bidder’s Edge, 100 F. Supp. 2d 1058 (N.D. Cal. 2000), was a leading case applying the trespass to chattels doctrine to online activities. In 2000, eBay, an online auction company, successfully used the ‘trespass to chattels’ theory to obtain a preliminary injunction preventing Bidder’s Edge, an auction data aggregator, from using a ‘crawler’ to gather data from eBay’s website. The opinion was a leading case applying ‘trespass to chattels’ to online activities, although its analysis has since been criticized.

As long as you are not crawling at a disruptive rate and the source is public, you should be fine. I suggest you check the websites you plan to crawl for any Terms of Service clauses related to scraping their intellectual property. If it says “no scraping or crawling”, you should respect it.

Suggestions:

- Scrape discreetly, and check what the site allows before you start scraping.
- Go conservative. Aggressively asking for data can burden the web server. An ethical way is to be gentle. No one wants to crash the server.
- Use the data wisely. Don’t duplicate the data. You can generate insight from collected data and use it to help your business.
- Reach out to the owner of the website before you start scraping.
- Don’t randomly pass scraped data to anyone. If it is valuable data, keep it secure.

Best 3 Ways to Crawl Data from a Website | Octoparse

The need to crawl web data has grown in the past few years. The crawled data can be used for evaluation or prediction in different fields. Here, I’d like to talk about 3 methods we can adopt to crawl data from a website.

1. Use Website APIs

Many large social media websites, like Facebook, Twitter, Instagram and StackOverflow, provide APIs for users to access their data. Where an official API exists, you can use it to get structured data. With the Facebook Graph API, for example, you choose the fields to query, then order the data, do the URL lookup, make requests, and so on. To learn more, you can refer to
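To make the "choose fields, then make requests" flow concrete, here is a minimal sketch of building a field-selecting query URL and reading a JSON reply. The `api.example.com` endpoint, the `fields` and `limit` parameters, and the sample response are hypothetical; every real API defines its own URLs, parameters and authentication.

```python
import json
from urllib.parse import urlencode


def build_api_url(base_url, resource, fields, **params):
    """Build a query URL that asks the API for specific fields only."""
    query = {"fields": ",".join(fields), **params}
    return f"{base_url.rstrip('/')}/{resource}?{urlencode(query)}"


# Hypothetical endpoint and parameters, for illustration only.
url = build_api_url("https://api.example.com/v1", "me", ["id", "name"], limit=10)

# A made-up response body, shaped the way many JSON APIs return records.
sample_response = '{"data": [{"id": "1", "name": "Ada"}]}'
names = [item["name"] for item in json.loads(sample_response)["data"]]
```

In a real client, the URL would be sent with `urllib.request.urlopen` (usually with an access token), and the response body would take the place of `sample_response`.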

2. Build your own crawler

However, not all websites provide users with APIs. Certain websites refuse to provide any public API because of technical limitations or for other reasons. Some may suggest RSS feeds, but because their use is limited, I will not recommend or comment further on them. In this case, we can build a crawler of our own.

How does a crawler work? Put another way, a crawler is a method of generating a list of URLs that you can feed through your extractor. Crawlers can be defined as tools for finding URLs. You first give the crawler a webpage to start from, and it follows all the links on that page. This process then keeps going in a loop.
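The loop described above can be sketched as a breadth-first traversal: start from one page, collect its links, and queue every link not seen before. This is only a sketch under stated assumptions: `fetch` is a stand-in for a real downloader, the `SITE` dictionary is a made-up three-page website, and link extraction uses the standard library's `html.parser`.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=50):
    """Follow links breadth-first and return the URLs that were fetched."""
    queue = deque([start_url])
    seen = {start_url}
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)  # fetch returns the page HTML, or None on failure
        if html is None:
            continue
        visited.append(url)
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.links:
            link = urljoin(url, href)  # resolve relative links
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# A made-up site held in memory so the sketch runs without network access.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">home</a>',
    "https://example.com/b": '<a href="/c">missing</a>',
}
urls = crawl("https://example.com/", SITE.get)
```

The `seen` set stops the loop from revisiting pages, and `max_pages` bounds the crawl. The resulting list is what would be fed to the extractor.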

Then, we can proceed with building our own crawler. Python is an open-source programming language with many useful libraries. Here, I suggest BeautifulSoup (a Python library) because it is easy to work with and has many intuitive features. More precisely, I will use two Python modules to crawl the data.

BeautifulSoup does not fetch the web page for us. That’s why I combine urllib2 with the BeautifulSoup library (urllib2 is the Python 2 module; in Python 3 its functionality lives in urllib.request). Then, we need to work with the HTML tags to find all the links within the page’s <a> tags and the right table. After that, iterate through each row (tr), assign each element of the row (td) to a variable, and append it to a list. Let’s first look at the HTML structure of the table (I am not going to extract information for the table heading).

By taking this approach, your crawler is customized. It can deal with certain difficulties met in API extraction. You can use a proxy to prevent it from being blocked by some websites, and so on. The whole process is within your control. This method should make sense for people with coding skills. The data frame you crawled should look like the figure below.
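The row-by-row extraction can be sketched as follows. To keep the example runnable without installing anything, it swaps in Python's built-in `html.parser` instead of BeautifulSoup; with BeautifulSoup the same idea would be a loop over `find_all("tr")` and `find_all("td")`. The table content and class name are invented for illustration.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of each <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None outside a row
        self._cell = None  # text pieces of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            if self._row:  # header rows hold only <th> cells, so they stay empty
                self.rows.append(self._row)
            self._row = None


# Made-up table standing in for the page being crawled.
HTML = """
<table>
  <tr><th>Name</th><th>Capital</th></tr>
  <tr><td>France</td><td>Paris</td></tr>
  <tr><td>Japan</td><td>Tokyo</td></tr>
</table>
"""
parser = TableRows()
parser.feed(HTML)
rows = parser.rows

# Assign each cell of a row to a variable and append it to a per-column list.
names, capitals = [], []
for name, capital in rows:
    names.append(name)
    capitals.append(capital)
```

The per-column lists can then be handed to whatever tabular structure you prefer, such as a data frame.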
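The proxy idea mentioned above might look like this with the standard library: rotate through a list of proxies and build a `urllib.request` opener for whichever one is next. The proxy addresses are placeholders and the helper names are assumptions for this sketch. No request is actually sent here.

```python
import itertools
import urllib.request


def rotating_proxies(proxy_urls):
    """Cycle endlessly through the given proxy addresses."""
    return itertools.cycle(proxy_urls)


def opener_for(proxy_url):
    """Build an opener that routes HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder addresses; substitute proxies you are actually allowed to use.
PROXIES = ["http://10.0.0.1:8080", "http://10.0.0.2:8080"]
rotation = rotating_proxies(PROXIES)
picked = [next(rotation) for _ in range(3)]
opener = opener_for(picked[0])
# opener.open(url, timeout=10) would send the request through that proxy.
```

Rotating proxies does not replace politeness: the request rate per target server should still stay within what the site tolerates.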

3. Take advantage of ready-to-use crawler tools

However, crawling a website by writing your own program may be time-consuming. For people without any coding skills, this would be a hard task. Therefore, I’d like to introduce some crawler tools.

Octoparse

Octoparse is a powerful, visual, Windows-based web data crawler. Its simple and friendly user interface makes it really easy to grasp. To use it, you need to download the application to your local desktop.

As shown in the figure below, you can click and drag the blocks in the Workflow Designer pane to customize your own task. Octoparse provides two crawling service subscription plans: the Free Edition and the Paid Edition. Both can satisfy users’ basic scraping or crawling needs. With the Free Edition, you can run your tasks locally.

If you switch from the Free Edition to a Paid Edition, you can use the Cloud-based service by uploading your tasks to the Cloud Platform. 6 to 14 cloud servers will run your tasks simultaneously, at a higher speed and on a larger scale. Plus, you can automate your data extraction without leaving a trace by using Octoparse’s anonymous proxy feature, which rotates through many IPs and prevents you from being blocked by certain websites.

Octoparse also provides an API to connect your system to your scraped data in real time. You can either import the Octoparse data into your own database or use the API to request access to your account’s data. After you finish configuring the task, you can export data into various formats, like CSV, Excel, HTML, TXT, and database (MySQL, SQL Server, and Oracle).

Another option is a web crawler covering all different levels of crawling needs. It offers a Magic tool which can convert a site into a table without any training sessions. It suggests that users download its desktop app if more complicated websites need to be crawled. Once you’ve built your API, it offers a number of simple integration options such as Google Sheets and Excel, as well as GET and POST requests. When you consider that all this comes with a free-for-life price tag and an awesome support team, it is a clear first port of call for those on the hunt for structured data. It also offers a paid enterprise-level option for companies looking for larger-scale or more complex data extraction.

Mozenda

Mozenda is another user-friendly web data extractor. It has a point-and-click UI for users without any coding skills. Mozenda also takes the hassle out of automating and publishing extracted data. Tell Mozenda what data you want once, and then get it however frequently you need it. Plus, it allows advanced programming through a REST API, with which the user can connect directly to a Mozenda account. It provides a Cloud-based service and IP rotation as well.

ScrapeBox

SEO experts, online marketers and even spammers should be very familiar with ScrapeBox and its very user-friendly UI. Users can easily harvest data from a website to grab emails, check page rank, verify working proxies and submit RSS feeds. By using thousands of rotating proxies, you will be able to peek at a competitor’s site keywords, research sites, harvest data, and comment without getting blocked or detected.

Google Web Scraper Plugin

If you just want to scrape data in a simple way, I suggest the Google Web Scraper Plugin. It is a browser-based web scraper that works like Firefox’s Outwit Hub. You can download it as an extension and install it in your browser. You highlight the data fields you’d like to crawl, right-click and choose “Scrape similar…”. Anything similar to what you highlighted will be rendered in a table ready for export, compatible with Google Docs. The latest version still had some bugs on spreadsheets. Even though it is easy to handle, note that it can’t scrape images or crawl data in large amounts.

Author: The Octoparse Team

Frequently Asked Questions about crawl data

Is crawling data legal?

If you’re doing web crawling for your own purposes, it is legal as it falls under fair use doctrine. The complications start if you want to use scraped data for others, especially commercial purposes. … As long as you are not crawling at a disruptive rate and the source is public you should be fine. (Jul 17, 2019)

How do you use crawl data?

Best 3 Ways to Crawl Data from a Website (Sep 8, 2021):

1. Use Website APIs. Many large social media websites, like Facebook, Twitter, Instagram, StackOverflow provide APIs for users to access their data. …
2. Build your own crawler. However, not all websites provide users with APIs. …
3. Take advantage of ready-to-use crawler tools.

What is the meaning of data crawling in Internet?

A web crawler (also known as a web spider or web robot) is a program or automated script which browses the World Wide Web in a methodical, automated manner. This process is called Web crawling or spidering. Many legitimate sites, in particular search engines, use spidering as a means of providing up-to-date data.