Web Scraping 101: 10 Myths that Everyone Should Know

1. Web Scraping is illegal

Many people have false impressions about web scraping, largely because some people disrespect the work published on the internet and steal the content. Web scraping isn’t illegal by itself; the problem comes when people scrape without the site owner’s permission and in disregard of the ToS (Terms of Service). According to one report, 2% of online revenues can be lost due to the misuse of content through web scraping. Even though no single law clearly addresses web scraping, it is covered by several legal regulations and doctrines. For example:

Violation of the Computer Fraud and Abuse Act (CFAA)

Violation of the Digital Millennium Copyright Act (DMCA)

Trespass to Chattel

Misappropriation

Copyright infringement

Breach of contract


2. Web scraping and web crawling are the same

Web scraping involves extracting specific data from a targeted webpage, for instance data about sales leads, real estate listings, and product pricing. In contrast, web crawling is what search engines do: a crawler scans and indexes a whole website along with its internal links. A “crawler” navigates through web pages without a specific extraction goal.
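To make the distinction concrete, here is a minimal Python sketch using only the standard library. The sample HTML, the `price` class name, and the helper names `scrape_prices` and `collect_links` are assumptions invented for illustration; a real scraper or crawler would fetch pages over the network and cope with far messier markup.

```python
from html.parser import HTMLParser

# Hypothetical page content, standing in for a fetched real estate listing page.
PAGE = """
<html><body>
  <a href="/listing/1">Listing 1</a>
  <a href="/listing/2">Listing 2</a>
  <span class="price">$250,000</span>
  <span class="price">$310,000</span>
</body></html>
"""


class PriceScraper(HTMLParser):
    """Scraping: pull out one specific kind of field (here, prices)."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        if tag == "span" and ("class", "price") in attrs:
            self._in_price = True

    def handle_endtag(self, tag):
        if tag == "span":
            self._in_price = False

    def handle_data(self, data):
        if self._in_price:
            self.prices.append(data.strip())


class LinkCollector(HTMLParser):
    """Crawling: collect every link so it can be visited next."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def scrape_prices(html):
    parser = PriceScraper()
    parser.feed(html)
    return parser.prices


def collect_links(html):
    parser = LinkCollector()
    parser.feed(html)
    return parser.links


print(scrape_prices(PAGE))
print(collect_links(PAGE))
```

The scraper knows in advance which field it wants, while the link collector only gathers URLs to visit later, which is the part of crawling that feeds an index.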

3. You can scrape any website

People often ask about scraping things like email addresses, Facebook posts, or LinkedIn information. According to an article titled “Is web crawling legal?”, it is important to note these rules before conducting web scraping:

Private data that requires a username and password cannot be scraped.

Comply with the ToS (Terms of Service) when it explicitly prohibits web scraping.

Don’t copy data that is copyrighted.

One person can be prosecuted under several laws. For example, someone who scraped confidential information and sold it to a third party, disregarding the cease-and-desist letter sent by the site owner, could be prosecuted under the law of Trespass to Chattel, the Digital Millennium Copyright Act (DMCA), the Computer Fraud and Abuse Act (CFAA), and Misappropriation.

It doesn’t mean that you can’t scrape social media channels like Twitter, Facebook, Instagram, and YouTube. They are friendly to scraping services that follow the provisions of the robots.txt file. For Facebook, you need to get its written permission before conducting automated data collection.

4. You need to know how to code

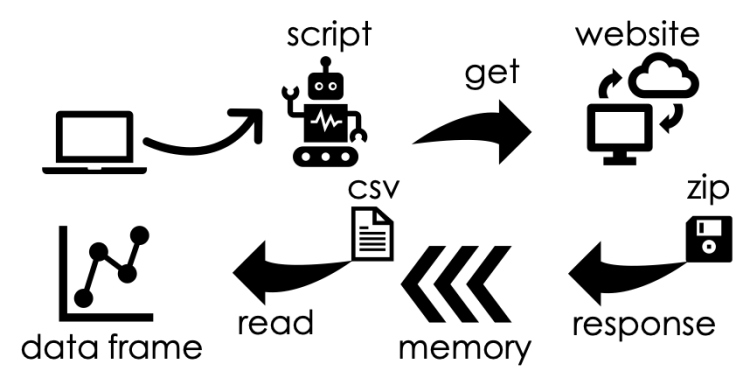

A web scraping tool (data extraction tool) is very useful for non-tech professionals like marketers, statisticians, financial consultants, bitcoin investors, researchers, journalists, etc. Octoparse launched a one-of-a-kind feature: web scraping templates, which are preformatted scrapers that cover over 14 categories on over 30 websites including Facebook, Twitter, Amazon, eBay, Instagram and more. All you have to do is enter the keywords/URLs as parameters, without any complex task configuration. Web scraping with Python is time-consuming. By contrast, a web scraping template is an efficient and convenient way to capture the data you need.

5. You can use scraped data for anything

It is perfectly legal to scrape data that websites publish for public consumption and use it for analysis. However, it is not legal to scrape confidential information for profit. For example, scraping private contact information without permission and selling it to a third party for profit is illegal. Besides, repackaging scraped content as your own without citing the source is unethical. You should follow the principle that spamming, plagiarism, and any fraudulent use of data are prohibited by law.


6. A web scraper is versatile

Maybe you’ve encountered websites that change their layout or structure once in a while. Don’t get frustrated when your scraper fails to read such a website the second time. There are many possible reasons. It isn’t necessarily because the site has identified you as a suspicious bot; it may also be caused by different geo-locations or machine access. In these cases, it is normal for a web scraper to fail to parse the website until you adjust it.


7. You can scrape at a fast speed

You may have seen scraper ads boasting about how speedy their crawlers are. It does sound good when they tell you they can collect data in seconds. However, you are the one who will be prosecuted if damage is caused. A large volume of data requests sent at high speed can overload a web server, which might lead to a server crash. In this case, the person responsible for the scraping is liable for the damage under the law of “trespass to chattels” (Dryer and Stockton 2013). If you are not sure whether a website can be scraped, please ask the web scraping service provider. Octoparse is a responsible web scraping service provider that puts clients’ satisfaction first. It is crucial for Octoparse to help our clients solve their problems and succeed.
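One common way to avoid overloading a server is to enforce a minimum delay between requests. The sketch below is only an illustration of that idea, not how Octoparse or any particular tool works; the `Throttle` class and its parameters are hypothetical names, and the right interval depends on the site.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests (assumed value)
        self._clock = clock               # injectable so the logic can be tested
        self._sleep = sleep
        self._last = None

    def wait(self):
        """Block until min_interval has passed since the previous call.

        Returns the number of seconds actually slept.
        """
        waited = 0.0
        if self._last is not None:
            remaining = self.min_interval - (self._clock() - self._last)
            if remaining > 0:
                self._sleep(remaining)
                waited = remaining
        self._last = self._clock()
        return waited


# Usage: call wait() before each request so requests are spaced out.
throttle = Throttle(min_interval=0.01)
for url in ["https://example.com/a", "https://example.com/b"]:
    throttle.wait()
    # fetch(url) would go here; it is omitted to keep the sketch offline.
```

Passing the clock and sleep functions as arguments is a design choice made here so the pacing logic can be checked without real waiting.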

8. API and Web scraping are the same

An API is like a channel for sending your data request to a web server and getting the desired data back. An API typically returns the data in JSON format over HTTP. Examples include the Facebook API, Twitter API, and Instagram API. However, it doesn’t mean you can get any data you ask for. Web scraping, by contrast, lets you visualize the process because it allows you to interact with the websites themselves. Octoparse has web scraping templates, which make it even more convenient for non-tech professionals to extract data by filling in the parameters with keywords/URLs.
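The difference shows up in how the same value is obtained. In this hedged sketch, `API_RESPONSE` and `HTML_PAGE` are made-up payloads (not the output of any real Facebook, Twitter, or Instagram endpoint), and the `followers` field and class name are assumptions for illustration.

```python
import json
from html.parser import HTMLParser

# Hypothetical API body: structured JSON, ready to use.
API_RESPONSE = '{"user": "example", "followers": 1200}'

# Hypothetical web page showing the same information for human readers.
HTML_PAGE = (
    '<div id="profile"><h1>example</h1>'
    '<span class="followers">1,200 followers</span></div>'
)


def followers_from_api(body):
    """API route: decode the JSON and read the field directly."""
    return json.loads(body)["followers"]


class FollowerParser(HTMLParser):
    """Scraping route: locate the element, then clean up its display text."""

    def __init__(self):
        super().__init__()
        self.followers = None
        self._capture = False

    def handle_starttag(self, tag, attrs):
        if tag == "span" and dict(attrs).get("class") == "followers":
            self._capture = True

    def handle_endtag(self, tag):
        self._capture = False

    def handle_data(self, data):
        if self._capture and data.strip():
            # "1,200 followers" -> 1200
            self.followers = int(data.split()[0].replace(",", ""))


def followers_from_html(page):
    parser = FollowerParser()
    parser.feed(page)
    return parser.followers


print(followers_from_api(API_RESPONSE), followers_from_html(HTML_PAGE))
```

The API route depends on the provider exposing the field, while the scraping route depends on the page layout staying the same.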

9. The scraped data only works for our business after being cleaned and analyzed

Many data integration platforms can help visualize and analyze the data. In comparison, it looks like data scraping doesn’t have a direct impact on business decision making. Web scraping indeed extracts raw data of the webpage that needs to be processed to gain insights like sentiment analysis. However, some raw data can be extremely valuable in the hands of gold miners.

With the Octoparse Google Search web scraping template, you can extract information from organic search results, including the titles and meta descriptions of your competitors’ pages, to determine your SEO strategy. For retail industries, web scraping can be used to monitor product pricing and distribution. For example, Amazon may crawl Flipkart and Walmart under the “Electronic” catalog to assess the performance of electronic items.

10. Web scraping can only be used in business

Web scraping is widely used in fields beyond business uses such as lead generation, price monitoring, price tracking, and market analysis. Students can leverage a Google Scholar web scraping template to conduct paper research. Realtors can conduct housing research and predict the housing market. You can find YouTube influencers or Twitter evangelists to promote your brand, or build your own news aggregator that covers only the topics you want by scraping news media and RSS feeds.

Source:

Dryer, A. J., and Stockton, J. 2013. “Internet ‘Data Scraping’: A Primer for Counseling Clients,” New York Law Journal. Retrieved from

Is Web Scraping Illegal? Depends on What the Meaning of the …

Depending on who you ask, web scraping can be loved or hated.

Web scraping has existed for a long time and, in its good form, it’s a key underpinning of the internet. “Good bots” enable, for example, search engines to index web content, price comparison services to save consumers money, and market researchers to gauge sentiment on social media.

“Bad bots,” however, fetch content from a website with the intent of using it for purposes outside the site owner’s control. Bad bots make up 20 percent of all web traffic and are used to conduct a variety of harmful activities, such as denial of service attacks, competitive data mining, online fraud, account hijacking, data theft, stealing of intellectual property, unauthorized vulnerability scans, spam and digital ad fraud.

So, is it Illegal to Scrape a Website?

So is it legal or illegal? Web scraping and crawling aren’t illegal by themselves. After all, you could scrape or crawl your own website, without a hitch.

Startups love it because it’s a cheap and powerful way to gather data without the need for partnerships. Big companies use web scrapers for their own gain but also don’t want others to use bots against them.

The general opinion on the matter does not seem to matter anymore because in the past 12 months it has become very clear that the federal court system is cracking down more than ever.

Let’s take a look back. Web scraping started in a legal grey area where the use of bots to scrape a website was simply a nuisance. Not much could be done about the practice until 2000, when eBay sought a preliminary injunction against Bidder’s Edge. eBay claimed that the use of bots on the site, against the will of the company, violated trespass to chattels law.

The court granted the injunction because users had to opt in and agree to the terms of service on the site, and because a large number of bots could be disruptive to eBay’s computer systems. The lawsuit was settled out of court, so it never came to a head, but the legal precedent was set.

In 2001 however, a travel agency sued a competitor who had “scraped” its prices from its Web site to help the rival set its own prices. The judge ruled that the fact that this scraping was not welcomed by the site’s owner was not sufficient to make it “unauthorized access” for the purpose of federal hacking laws.

Two years later the legal standing of eBay v. Bidder’s Edge was implicitly overruled in Intel v. Hamidi, a case interpreting California’s common law trespass to chattels. It was the wild west once again. Over the next several years the courts ruled time and time again that simply putting “do not scrape us” in your website terms of service was not enough to create a legally binding agreement. To enforce that term, a user must explicitly agree or consent to the terms. This left the field wide open for scrapers to do as they wished.

Fast forward a few years and you start seeing a shift in opinion. In 2009 Facebook won one of the first copyright suits against a web scraper. This laid the groundwork for numerous lawsuits that tie web scraping to direct copyright violation and very clear monetary damages. The most recent case is AP v. Meltwater, where the court stripped away what is referred to as fair use on the internet.

Previously, for academic, personal, or information aggregation purposes, people could rely on fair use and use web scrapers. The court has now gutted the fair use defense that companies had used to defend web scraping. The court determined that even small percentages, sometimes as little as 4.5% of the content, are significant enough to not fall under fair use. The only caveat the court made was based on the simple fact that this data was available for purchase. Had it not been, it is unclear how the court would have ruled. Then a few months back the gauntlet was dropped.

Andrew Auernheimer was convicted of hacking based on the act of web scraping. Although the data was unprotected and publicly available via AT&T’s website, the fact that he wrote web scrapers to harvest that data en masse amounted to a “brute force attack”. He did not have to consent to terms of service to deploy his bots and conduct the web scraping. The data was not available for purchase. It wasn’t behind a login. He did not even gain financially from the aggregation of the data. Most importantly, it was buggy programming by AT&T that exposed this information in the first place. Yet Andrew was at fault. This isn’t just a civil suit anymore. This charge is a felony violation that is on par with hacking or denial of service attacks and carries up to a 15-year sentence for each charge.

In 2016, Congress passed its first legislation specifically to target bad bots — the Better Online Ticket Sales (BOTS) Act, which bans the use of software that circumvents security measures on ticket seller websites. Automated ticket scalping bots use several techniques to do their dirty work including web scraping that incorporates advanced business logic to identify scalping opportunities, input purchase details into shopping carts, and even resell inventory on secondary markets.

To counteract this type of activity, the BOTS Act:

Prohibits the circumvention of a security measure used to enforce ticket purchasing limits for an event with an attendance capacity of greater than 200 persons.

Prohibits the sale of an event ticket obtained through such a circumvention violation if the seller participated in, had the ability to control, or should have known about it.

Treats violations as unfair or deceptive acts under the Federal Trade Commission Act. The bill provides authority to the FTC and states to enforce against such violations.

In other words, if you’re a venue, organization or ticketing software platform, it is still on you to defend against this fraudulent activity during your major onsales.

The UK seems to have followed the US with its Digital Economy Act 2017, which achieved Royal Assent in April. The Act seeks to protect consumers in a number of ways in an increasingly digital society, including by “cracking down on ticket touts by making it a criminal offence for those that misuse bot technology to sweep up tickets and sell them at inflated prices in the secondary market.”

In the summer of 2017, LinkedIn sued hiQ Labs, a San Francisco-based startup. hiQ was scraping publicly available LinkedIn profiles to offer clients, according to its website, “a crystal ball that helps you determine skills gaps or turnover risks months ahead of time.”

You might find it unsettling to think that your public LinkedIn profile could be used against you by your employer.

Yet a judge on Aug. 14, 2017 decided this is okay. Judge Edward Chen of the U.S. District Court in San Francisco agreed with hiQ’s claim in a lawsuit that Microsoft-owned LinkedIn violated antitrust laws when it blocked the startup from accessing such data. He ordered LinkedIn to remove the barriers within 24 hours. LinkedIn has filed to appeal.

The ruling contradicts previous decisions clamping down on web scraping. And it opens a Pandora’s box of questions about social media user privacy and the right of businesses to protect themselves from data hijacking.

There’s also the matter of fairness. LinkedIn spent years creating something of real value. Why should it have to hand it over to the likes of hiQ — paying for the servers and bandwidth to host all that bot traffic on top of their own human users, just so hiQ can ride LinkedIn’s coattails?

I am in the business of blocking bots. Chen’s ruling has sent a chill through those of us in the cybersecurity industry devoted to fighting web-scraping bots.

I think there is a legitimate need for some companies to be able to prevent unwanted web scrapers from accessing their site.

In October of 2017, and as reported by Bloomberg, Ticketmaster sued Prestige Entertainment, claiming it used computer programs to illegally buy as many as 40 percent of the available seats for performances of “Hamilton” in New York and the majority of the tickets Ticketmaster had available for the Mayweather v. Pacquiao fight in Las Vegas two years ago.

Prestige continued to use the illegal bots even after it paid $3.35 million to settle New York Attorney General Eric Schneiderman’s probe into the ticket resale industry.

Under that deal, Prestige promised to abstain from using bots, Ticketmaster said in the complaint. Ticketmaster asked for unspecified compensatory and punitive damages and a court order to stop Prestige from using bots.

Are the existing laws too antiquated to deal with the problem? Should new legislation be introduced to provide more clarity? Most sites don’t have any web scraping protections in place. Do the companies have some burden to prevent web scraping?

As the courts try to further decide the legality of scraping, companies are still having their data stolen and the business logic of their websites abused. Instead of looking to the law to eventually solve this technology problem, it’s time to start solving it with anti-bot and anti-scraping technology today.


Keep in mind that companies have *sued* for scraping not through the …

On the ethics side, I don’t scrape large amounts of data, e.g. giving clients lead gen (x leads for y dollars). In fact, I have never done a scraping job and don’t intend to do those jobs; for me it’s purely for personal use and my little side projects. I don’t even like the word scraping because it comes loaded with so many negative connotations (which sparked this whole comment thread), and for a good reason: it’s reflective of the demand in the market. People want cheap leads to spam, and that’s bad use of […]. Generally I tend to focus more on words and phrases like ‘automation’ and ‘scripting a bot’. I’m just automating my life; I’m writing a bot to replace what I would have to do on a daily basis, like looking on Facebook for some gifs and videos then manually posting them to my site. Would I spend an hour each and every day doing this? No, I’m much lazier than that. Who is anyone to tell me what I can and can’t automate in my life?

This is exactly my response to “you can’t legally scrape my site because of TOS.” I don’t think anyone has a legal right to tell me HOW I use their service. Making “browsing my website using a script you wrote yourself” illegal is akin to “You cannot use the tab key to tab between fields on my website, you must only use the touchpad to move the cursor over each field individually.” It’s baloney.

Under the CFAA, they do have the right to determine what constitutes authorized access. If they say you’re unauthorized for using the wrong buttons on your mouse, then you’re unauthorized. It’s treated very similarly to trespass on private property. You can try telling the judge it’s baloney, but if he’s going by current precedent, he probably won’t agree with you.

It is not the same flavor of violation as trespassing on land, more like riding a bicycle on a sidewalk. Fortunately, I’m not in the business of scraping sites, but I still find this legal precedent abhorrent, and I hope it gets struck down in court when push comes to shove. I would certainly vote that way if given the chance.

It’s like riding a red mountain bike on a bike path with a small sign saying “only black road bikes allowed”.

> Who is anyone to tell me what I can and can’t automate in my life? You are exactly right. But although a site can deny you access for any arbitrary reason (it’s their website, after all), obviously government thinks they are the ones to enforce this. What if the ToS say you can only access a site while jumping hoops? Only read the ToS after a while and wasn’t hooping? Well too bad, now you are being sued for reading the main page _and_ the ToS page without jumping. Comment Terms of Service: If you read any of this text you owe lerpa $1.000.000 to be paid up until 09/01/2016.

It would be OK if it wasn’t “You can’t scrape my site. Unless of course you’re Google.” This double standard drives me mad.

As the owner of a large website, I don’t care what you think. I block by default and whitelist when I decide it’s in my interest. If you don’t think this is reasonable, chances are you’ve never run a large website, or analyzed the logs of a large website. You’d be astonished how much robotic activity you’ll receive. If left unchecked it can easily swamp legitimate traffic. Unless you have a way for me to automatically identify “honourable” scrapers such as yourself as distinct from the thousands upon thousands of extremely dodgy scrapers from across the world, my policy shall remain.

As the user of large websites I don’t care. I’m not going to read the TOS and I will continue to scrape what I like since it makes my life more convenient. Like OP when blocked I’ll just drive my scraping through a web browser which is the same as I’ve done for years on various sites that never provided APIs.

“As the user of large websites I don’t care”. Are you sure? Do you want your OK Cupid or LinkedIn profile to be crossposted on another website without your knowledge?

If you don’t want your data public, then don’t make it public in the first place. That’s a good rule of thumb.

Putting it behind a signup page with terms that don’t allow sharing is not “making it public”. While in the US that may “just” be treated as unauthorized access, in the EU, if you make the data public it’s also a violation of the Data Protection Directive, putting you at risk of prosecution in every EU country from which you have included data. You may be right from a risk minimisation perspective. But for a lot of data the risk in the case of exposure is low enough that it is a totally valid risk management strategy to assume that legal protections will be a sufficient deterrent to prevent enough of the most blatant abuses.

Eh, not really. The Data Protection Directive doesn’t even apply here – if the first party (OKCupid) made it available to a third party (the scraper), then the first party can be held in violation, but not the third party.

If you have control of personally identifiable data, it’s likely that at least some of the EU data protection rules will apply to you regardless of how you got it.

Yes, but as you say, they apply regardless. More specifically, they apply to data that you have (and are storing), not the act of obtaining it. As a private individual it’s not hard to comply either, for private use. If you publish it, it becomes a different story, because it’s PII. And, as soon as it’s in possession of a company, they need to comply with more rules about securely storing it, etc. (this isn’t enforced very well, though). Private individuals can’t be held to that because there’s (in theory) no legal way to check it.

Why is it a double standard? Google scraping usually benefits the site with increased traffic and revenue, in a way most other scraping does not. Saying “you can scrape me if it benefits me” isn’t totally in keeping with the principles of the open web, but it’s not hypocritical.

With a risk of stating the obvious, this is a double standard simply because there are two standards – one for Google and one for others. I can’t speak for the poster you were replying to, but whilst I see it as logical self-interested behaviour by site owners, it still feels unfair.

There isn’t: the function for this standard includes expected benefit as an input. Every standard has inputs, so that certainly isn’t the quality for making something a double standard. The only remaining quality is how unfair it feels, so it would probably be better to just address that, since it is obviously the only thing you disagree about.

With that logic, decreased wages for women are not a double standard due to the potential for maternity leave affecting their output at work. That is a double standard plain and simple, and a very dangerous one at that.

Your example is a case of discrimination, but the economic rationale is unquestionable. There is a tremendous upfront cost for new employees, who are not valuable contributors for some lengthy ramp up period and furthermore accrue experience over the course of employment. So the lifetime value curve for any given employee is typically skewed left.

I think I’m just being […]. My point was that when you call something a double standard, you’re arguing two things of equal value have been judged differently under the same standard. But by acknowledging they’ve been judged differently, you’re acknowledging that there is a judgement, a standard, that applies the same to both, and produces the results you object to. What you really object to is the fairness of the qualities checked by the standard. Since the outcome of calling things that, vs calling them a double standard, is the same, I think most people already know and have no trouble with this. My protests were […]. The term could gain value if there were certain whitelisted judgeable aspects (like expected value), and judgements that aren’t based on things from the whitelist were considered outside the scope of a standard. Then, calling the standard unfair and calling it a double standard would have a different meaning (if only in some contrived way, since any aspect is just an argument away from the whitelist).

It’s their site, they can block whatever they want. The problem is the stupid far reaching conclusion that this is trespassing. Normal trespassing laws are way too overreaching (see how it is handled in the UK for a saner example) but now you have the amazing possibility of remote trespassing. The fun part is that it’s just a matter of someone hiding something that says you cannot access the site in a place that you have to access the site in order to read: the ToS. Suing people over this is […]. The real problem is the involvement of Govt, and this kind of absurdity regarding ToS, EULAs and so on is something that has been going on for decades. If you have the money you can make Govt your personal watch dogs.

Whether or not it technically qualifies as a “double standard,” in practice I don’t see anything inherently unfair about it. If a stranger enters my house without my permission, that’s trespassing. But there’s nothing unfair about letting in someone who I invite over.

That’s a terrible analogy. Your home is private, websites are not. The fact is that websites are posted online for all to see, so it’s more like saying certain people at a park may take pictures while others are not allowed. That’s unfair. If everyone could take pictures, it would be fair. Yes, someone with an old bright bulb camera might be annoying people, but nobody said “fair” meant all players would be nice or that having a “fair” policy would somehow be more beneficial to the website owner. It’s not, that’s why site owners are selective. So they have a double standard, but it’s for their benefit, not that of the site visitors (be they human or bot).

How about the analogy of an art gallery disallowing photography? Is the gallery being hypocritical when they allow the local paper to take photos for publicity, or when they permit an archivist that has a known reputation to take photos for archival purposes?

You can still deal with the old bright bulb cameras: you can have rules which apply to everyone. So you can make a rule at the park that pictures are allowed, but only without flash, or that only digital cameras are allowed, or only digital cameras with the fake-shutter noises turned off, etc. As long as the rule applies to everyone equally, it’s fair, even if you think the rule is […]. For websites, it’s not fair to have different rules for Google than others. What would be fair is some kind of rule about how often visitors can visit, how much they’re allowed to download, etc. Personally, though, I think all this is total BS. Sites are open to the public, but they also serve the whims of their owners. If the site wants to prevent access to people from a certain IP range, that should be their right. If they don’t want any scrapers, that should be their right too, or if they want to allow Google and not anyone else, that should also be their right. What isn’t right is that they can use the government to enforce these arbitrary rules. If they want to block my scraper, that’s fine, if they can do it on their end technologically. If they want to block my IP, they can do that too. But suing me or having the cops come to my door because they’re too incompetent or lazy to do these things technologically is unacceptable. The role of government is not to enforce arbitrary policies made up by business owners.

Using the law to block crawlers is more like saying:

1. Google can come in

2. Other Americans can’t come in

3. Chinese people can come in (or anywhere else where US laws don’t apply)

It might not be unfair, but it is certainly pointless and arbitrary.

To be fair, many companies which take anti-scraping seriously will also take inputs like geographic origin of a request into consideration when applying request throttling and filtering.

Google is basically algorithms built on top of a scraping service. It’s unfair to competitors (and potential disruptors) to restrict access to data that Google can fetch without limits.

Exactly, market scrapers as search […]. All smart websites should include a ToS that says you are not allowed to access their data, so they can sue for trespassing anyone that they don’t like. The far reaching of government into this, and also the pirating stuff (which I do not condone but think that arresting people for that is waay too much), is what makes me want for the system to collapse under its own weight. Like some website suing members of congress for visiting it while violating the ToS in this case. I also secretly wanted Oracle to win vs Google so that cloning an API was piracy and that would extend to being a crime to purchase pirated goods, which would make all clean room reverse engineering a criminal activity. That would lead to anyone that uses a PC without an authentic IBM BIOS (look up Phoenix BIOS) being arrested, in theory, so even the US president would have to fall into that. It would have been a glorious shitstorm if Oracle won and IBM took that precedent to its logical implications: the computer world would have failed, and the law would either be made even more arbitrary or be fixed, but at least it would be shown how idiotic the state of affairs was.

Your idea about Oracle winning and society coming crashing to a halt is ridiculous and wouldn’t have happened. Your flaw is believing that the law and the government will work with logical precision, so that a flaw in the law will, like an infinite recursive loop in programming code, cause complete disaster. It doesn’t work that way. There’s plenty of cases where the law is clearly broken (see civil forfeiture vs. the 4th Amendment to the US Constitution), yet nothing is done. That’s because the government is run by humans, and they’ll enforce things the way they want. Double standards happen all the time with law, and it takes big, expensive court cases to sort them out, and of course that only happens when some moneyed interest wants to fix it (which is why civil forfeiture is still a big thing–they’re not going after extremely wealthy people or corporations with it). While IBM is certainly large enough to bring a big case like you suggested, the US government is far bigger and can simply invent a legal way of ignoring them, just as was done when the SCOTUS decided to rule in favor of using Eminent Domain to seize private property to hand over to commercial interests.

because i may also come to the point where i am a direct competitor to google, but i will never get there because i can’t scrape any site like they do. your next argument may very well be a very racist one with the very same excuse you used above.

And if you have some way to identify yourself as a potential competitor to google and not some jackass trying to scrape email addresses or spam comments forms, I’m all ears.

A majority of the websites that blekko, a google competitor, contacted to ask for access ignored us.

There are worse barriers to entry for a search engine! DMCA take-downs… Right to be forgotten… click history…

Worse is that Google tries to stop scraping. It’s like they don’t want anyone to see past the first page of results. They could scrape your website and then they prevent you from scraping your own data […]. The whole process is silly; it reflects the duct tape and chicken wire nature of the web. No one should have to “scrape” or “crawl”. Data should be put into an open universal format (no tags) and submitted when necessary (rsynced) to a public access archive, mirrored around the world, to bridge the gap until we reach a more content addressable system (cf. location based). Clients (text readers, media players, whatever) can download and transform the universally formatted data into markup, binary, etc., whatever they wish, but all the design creativity and complexity of “web pages” or “web apps” can be handled at the network edge, client-side. “Crawling” should not be necessary. No one should have to store HTML tags and other window dressing for […].

That’s the antithesis of the world wide web because you’ve just centralised data storage, which makes someone ‘own’ the

I do not understand your point. To give an example, there is a lot of free open source software mirrored all over the internet, mostly on FTP servers, but also on rsync, etc. If you use Linux or BSD you probably are using some of this software. If you use the www, then you are probably accessing computers that use this software. If you drive a new Mercedes you are probably using some of this software. There are a lot of copies of this code in a lot of places. Is that centralized? Does anyone hosting a mirror (“repository”) “own” the software? Is it the same person or entity hosting every mirror? Compare Google’s copies of everyone else’s data, also replicated in a lot of places around the world. Who “owns” this data?

Double standard? The difference is that Googlebot is built on being unobtrusive. I can easily build a scraper that will quickly DDoS a site. Take LinkedIn, for example: if they allow 10,000 people to send 100 scraping requests per second every day, then that is stolen bandwidth that LinkedIn has to pay for, and the scrapers get free data. The difference is that Google has standards from which sites usually benefit, not to mention that they allow you to disallow their bot. It just doesn’t work the same way with some random developer building a scraper.
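As a rough illustration of what “disallowing a bot” looks like from the scraper’s side, here is a minimal sketch using Python’s standard `urllib.robotparser`. The robots.txt content, the `MyScraper` user agent, and the `example.com` URLs are all made up for the example; a real crawler would fetch the file from the target site before requesting anything else.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: Googlebot may crawl everything except /private/,
# while every other bot is asked to stay away entirely.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt rules permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("Googlebot", "MyScraper"):
        for path in ("/index.html", "/private/report.html"):
            url = "https://example.com" + path
            print(agent, path, is_allowed(ROBOTS_TXT, agent, url))
```

Nothing in this check is enforced by the server; it only shows how a well-behaved client could decide to skip a URL.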

I agree that Googlebot is well behaved. When it detects your site is slowing down, it will back itself off. Unfortunately, this is often to your detriment. In my experience, on a large site, Google will often slurp as much as you let it, upwards of hundreds of pages per second.
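The “back off when the site slows down” behavior can be sketched in a few lines. This is not Googlebot’s actual algorithm, which is not described in this thread; it is an assumed, simplified policy in which the pause between requests doubles whenever a response takes more than twice the typical time, and shrinks again once the server recovers. The class name and all thresholds are hypothetical.

```python
class AdaptiveDelay:
    """Grow the pause between requests when the server appears to slow down."""

    def __init__(self, base_delay=1.0, max_delay=60.0, slow_factor=2.0):
        self.base_delay = base_delay    # shortest pause we ever use (seconds)
        self.max_delay = max_delay      # longest pause we ever use (seconds)
        self.slow_factor = slow_factor  # "slow" means this many times the baseline
        self.delay = base_delay
        self.baseline = None            # typical response time seen so far

    def record(self, response_seconds: float) -> float:
        """Record one response time and return the pause to use before the next request."""
        if self.baseline is None:
            self.baseline = response_seconds
        if response_seconds > self.slow_factor * self.baseline:
            # Server looks stressed: double the pause, up to the cap.
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            # Server looks healthy: relax the pause and refresh the baseline slowly.
            self.delay = max(self.delay / 2, self.base_delay)
            self.baseline = 0.9 * self.baseline + 0.1 * response_seconds
        return self.delay


if __name__ == "__main__":
    pacing = AdaptiveDelay()
    for seconds in (0.2, 1.0, 1.0, 0.2):
        print(f"response {seconds:.1f}s -> wait {pacing.record(seconds):.1f}s")
```

A real scraper would call `time.sleep()` with the returned value between requests; the sketch leaves that out so the logic can be read on its own.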

Google usually does 300 pages a minute on my site. In total, bots were loading about 1,000 pages a minute.

That is a lot! It’s also still an order of magnitude less than big content sites. Not taking anything away from what must be a successful website to get a consistent 300 pages/minute crawl rate, but only to illustrate magnitude.

I was curious, so I just checked the stats through webmaster tools. For the last 90 days, the low is 450,000 daily crawled pages, average is 650,000, and yesterday was the high of 1,130,000 (780 per minute). Ouch.
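The per-minute figure follows from dividing the daily count by the 1,440 minutes in a day. A quick check of the three numbers quoted above, assuming the crawl is spread evenly over the day (real crawl traffic is burstier than that):

```python
MINUTES_PER_DAY = 24 * 60  # 1,440


def pages_per_minute(pages_per_day: int) -> float:
    """Average crawl rate per minute for a given daily page count."""
    return pages_per_day / MINUTES_PER_DAY


if __name__ == "__main__":
    for label, daily in (("low", 450_000), ("average", 650_000), ("high", 1_130_000)):
        print(f"{label}: {pages_per_minute(daily):.1f} pages/minute")
```

The peak works out to roughly 785 pages per minute, in line with the rounded 780 in the comment.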

This particular site is top 5,000 Alexa. The content changes every minute, and Google is fast at picking up those changes. The last cache of the homepage was 7 minutes ago. There’s definitely a correlation between my sites’ Google rankings, their organic traffic, and their crawl rate. The other sites I run are Alexa top 30,000 and top 100,000. They all feature dynamically changing content, but Google is definitely using a higher crawl rate on my higher ranking sites. This isn’t a surprise though; Google has limited resources like everyone, and they’ll focus those resources in a way that provides the most value. If you’re talking about the correlation between daily ranking and daily crawl rate for an individual site, then no, I’m not aware of any patterns. For example, the graph is flat for organic traffic and total indexed pages, but the crawl rate jumps up and down as mentioned, and it doesn’t appear to relate on a daily basis.

This post is kind of crazy, aggrandizing bad behavior and misuse of others’ resources against their wishes. Scraping against the TOS is super bad netizen stuff, and I don’t think people should be posting positive reviews of people doing this. Breaking captchas and the like is basically blackhat work and should be looked down upon, not congratulated as I see in this thread.

> Scraping against the TOS is super bad netizen stuff, and I dont think people should be posting positive reviews of people doing this. Breaking captchas and the like is basically blackhat work and should be looked down upon, not congratulated as I see in this thread.
Scraping, in my opinion, isn’t black hat unless you are actually affecting their service or stealing info. If you are slamming the site with requests because of your scraping, yeah, you need to knock it off. If you throttle your scraper in proportion to the size of their site, you aren’t really harming anyone. In regards to “stealing info”, as long as you aren’t taking info and selling it as your own (which it seems OP is indeed doing), that is just fine. tl;dr: Scraping isn’t bad / blackhat as long as you aren’t affecting their service or business.

> If you throttle your scraper in proportion to the size of their site, you aren’t really harming anyone.
How well do you understand their site infrastructure to know whether you’re doing harm? It’s perfectly possible that your script somehow bypasses safeguards they had in place to deal with heavy usage, and now their database is locking unnecessarily.

Eh, this is pretty weak. Scrapers are no different from other browsing devices. The web speaks HTTP. There’s no reason that using another HTTP browser would cause any disparate impact just by virtue of not being a conventional desktop browser; you’ve thrown out a pretty absurd hypothetical. In fact, scrapers usually cause less impact because they usually don’t download images or execute JavaScript. I did an analysis and a session browsed with my specialized browser would always consume less than 100K of bandwidth (and often far less), whereas a session browsed with a conventional desktop browser would consume at least 1.2 MB, even if everything was cached, and sometimes up to 5 MB. In addition, on the desktop, a JavaScript heartbeat was sent back every few seconds, so all of that data was conserved too. Because we were a specialized browser used by people looking for a very specific piece of data, we could employ caching mechanisms that meant that each person could get their request fulfilled without having to hit the data source’s servers. We also had a regular pacing algorithm that meant our users were contacting the site way less than they would’ve been if they were using a conventional desktop browser. Our service saved the data source a large amount of resource cost. When we were shut down, their site struggled for about two weeks to return to stability. I think they had anticipated the opposite effect. Our service also saved our users a large amount of time. We were accessing publicly-available factual data that was not copyrightable (but only available from this one source’s site). There’s no reason that the user should be able to choose between Firefox and Chrome but not a task-specialized browser. It is true that some people will (usually accidentally) cause a DDoS with scrapers because the target site is not properly configured, but the same thing could be done with desktop browsers. It doesn’t mean that scrapers should be disadvantaged.
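The caching idea in the comment above, where many users are served from one upstream fetch, can be sketched as a small time-to-live cache. The commenter does not describe their implementation, so everything here is an assumption for illustration: the `TTLCache` name, the 60-second lifetime, and the `fetch` callable standing in for a real HTTP request.

```python
import time
from typing import Callable, Dict, Tuple


class TTLCache:
    """Serve repeated lookups from memory until an entry is older than ttl_seconds."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch          # stand-in for the real request to the data source
        self._ttl = ttl_seconds
        self._clock = clock          # injectable so the behavior can be tested
        self._store: Dict[str, Tuple[float, str]] = {}
        self.upstream_hits = 0       # how many times the data source was contacted

    def get(self, key: str) -> str:
        now = self._clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]         # fresh enough: no upstream request
        self.upstream_hits += 1
        value = self._fetch(key)
        self._store[key] = (now, value)
        return value


if __name__ == "__main__":
    cache = TTLCache(lambda key: f"data for {key}", ttl_seconds=60)
    for _ in range(100):             # 100 users asking for the same record
        cache.get("record-1")
    print("upstream requests:", cache.upstream_hits)
```

With a cache like this in front of the scraper, a hundred users asking for the same record within the lifetime cost the source a single request.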

A small counterpoint to this — in the airline industry, it’s relatively commonplace for seat reservations to be made for a user _before_ payment has occurred. In this case, if you’re mirroring normal browser activity, you can (temporarily) reduce availability on a flight, potentially even bumping up the price for other, legitimate users, and almost certainly causing the airline to incur costs beyond normal bandwidth and server costs. I’m sure there are many other domains for which this is also the case, however rare.

If they don’t do the seat reservation behind a POST, or at least blacklist the reservation page in robots.txt, I have no sympathy.

I’ve had this happen regularly enough that I developed the habit of finding a fare in the morning and then returning at 11pm ready to buy.

Cinemas and some online shops do the same. I’ve always wondered if it’s possible to block all ticket sales for entire flights/screenings/products this way. And if airline tickets are based on supply vs. demand, it might even be possible to drive down ticket prices by suddenly dropping a load of blocks near the flight date.

If you have ever tried to buy hot tickets online and not been able to get any, this is due to bots. Bots are the scalpers. That can easily be prevented by requiring ID matching the ticket on entry, but the ticket sellers often don’t seem to care.

> you’ve thrown out a pretty absurd hypothetical
Not even remotely absurd. Where is the data your scraper is consuming coming from? It’s almost always served from some sort of data repository (SQL or otherwise). That data costs far more per MB to serve up quickly than JS/CSS/images. Suppose, for example, you host a blogging platform that has one very popular user. Most accounts on your site don’t get a ton of visitors, and that one very popular user’s posts are all stored in cache. Then along comes a scraper. He thinks, “Hey, this site is serving up a million page impressions a day. It can definitely handle me scraping the site.” But when he runs the scraper, he fills up the cache with a ton of data that it doesn’t need, causing cache evictions and general performance degradation for everyone else.
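The cache-eviction scenario above can be reproduced with a toy least-recently-used cache. This is a simplified model under assumed numbers (a 10-entry cache, 10 popular posts, 1,000 obscure pages), not a measurement of any real site; the class and page names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Tiny least-recently-used cache that counts hits and misses."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.items = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        if key in self.items:
            self.items.move_to_end(key)          # mark as most recently used
            self.hits += 1
        else:
            self.misses += 1
            self.items[key] = f"page {key}"      # stand-in for a database read
            if len(self.items) > self.capacity:
                self.items.popitem(last=False)   # evict the least recently used
        return self.items[key]


def misses_for_popular_posts(with_scraper: bool) -> int:
    """Count cache misses on the popular posts after optional scraper traffic."""
    cache = LRUCache(capacity=10)
    popular = [f"popular-{i}" for i in range(10)]
    for post in popular:                         # normal traffic warms the cache
        cache.get(post)
    if with_scraper:
        for i in range(1000):                    # scraper walks rarely-read pages
            cache.get(f"obscure-{i}")
    before = cache.misses
    for post in popular:                         # regular readers come back
        cache.get(post)
    return cache.misses - before


if __name__ == "__main__":
    print("misses without scraper:", misses_for_popular_posts(False))
    print("misses with scraper:", misses_for_popular_posts(True))
```

In this model the returning readers hit the cache every time when no scraper has run, and miss on every popular post after the scraper has pushed them out.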

There are already 6-8 major scrapers that do this constantly, across the whole internet, called search engines. You can’t handle that? What if you get a normal user who says “Hey, I wanna see some of the lesser known authors on this platform” and opens up a hundred tabs with rarely-read blogs? What if you get 10 users who decide to do that on the same day? Is it reasonable to sue them? Should there be a legal protection to punish them for making your site slow? Don’t blame the user for your scaling issues. If the optimized browser (“scraper”) isn’t hammering your site at a massively unnatural interval, it’s clean. And if it is, you should have server-side controls that prevent one client from asking for too much. These are just normal problems that are part of being on the web. It’s not fair to pin it on non-malicious users, even if they’re not using a conventional desktop browser.
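One common form of the “server-side controls” mentioned above is a per-client token bucket. The sketch below is a generic illustration, not something described in the thread: the rate of one request per second, the burst of five, and identifying clients by a plain string are all assumptions.

```python
import time
from typing import Callable, Dict, Tuple


class RateLimiter:
    """Per-client token bucket: each client may burst, then is held to a steady rate."""

    def __init__(self, rate_per_second: float, burst: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_second
        self.burst = burst
        self.clock = clock                                  # injectable for testing
        self.buckets: Dict[str, Tuple[float, float]] = {}   # client -> (tokens, last seen)

    def allow(self, client_id: str) -> bool:
        """Return True if this client's request should be served right now."""
        now = self.clock()
        tokens, last = self.buckets.get(client_id, (float(self.burst), now))
        # Refill tokens for the time elapsed since the client's last request.
        tokens = min(float(self.burst), tokens + (now - last) * self.rate)
        if tokens >= 1:
            self.buckets[client_id] = (tokens - 1, now)
            return True
        self.buckets[client_id] = (tokens, now)
        return False


if __name__ == "__main__":
    limiter = RateLimiter(rate_per_second=1, burst=5)
    results = [limiter.allow("203.0.113.7") for _ in range(8)]
    print(results)  # the first requests pass, the rest are refused until tokens refill
```

A limiter like this treats a hundred-tab human and a scraper the same way, which is the commenter’s point: the control sits on the server and does not depend on what kind of client is asking.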

First, search engines are scrapers. No need to make a distinction. Second, search engines don’t always respect robots.txt. They sometimes do. Even Google itself says it may still contact a page that has disallowed it. [0] Third, robots.txt is just a convention. There’s no reason to assume it has any binding authority. Users should be able to access public HTTP resources with any non-disruptive HTTP client, regardless of the end server’s opinion. [0] “You should not use robots.txt as a means to hide your web pages from Google Search results. This is because other pages might point to your page, and your page could get indexed that way, avoiding the robots.txt file.”

In the Google quote you link to, Google is not contacting your page. Rather, Google will index pages that are only linked to, which it has never crawled, and will serve up those pages if the link text matches your query. That’s how you get those search results where the snippet is “A description of this page has been blocked by robots.txt” or similar. There’s a somewhat related issue where, to ensure your site never exists in Google, you actually need to allow it to be crawled, because the standard for that is a “meta noindex” tag, and in order to see the meta noindex, the search engine has to fetch the page.
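The noindex point is easier to see in code: the directive lives inside the page’s HTML, so a crawler can only learn about it after downloading the page. Here is a minimal sketch using Python’s standard `html.parser`; the sample pages are made up, and a real crawler would also need to consider the equivalent `X-Robots-Tag` HTTP header, which this sketch ignores.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Look for <meta name="robots" content="... noindex ..."> in a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        directives = [d.strip().lower() for d in attributes.get("content", "").split(",")]
        if "noindex" in directives:
            self.noindex = True


def has_noindex(html: str) -> bool:
    """Return True if the already-fetched HTML asks not to be indexed."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.noindex


if __name__ == "__main__":
    blocked = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
    normal = "<html><head><title>Hello</title></head></html>"
    print(has_noindex(blocked), has_noindex(normal))
```

Note that `has_noindex` takes the page body as input: if robots.txt stops the crawler from fetching the page, this check never runs.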

And the original point of my comment was that doing this is extremely rude and not appropriate, not that it couldn’t be done or that others weren’t doing it. You’re free to send any request to any server you want, and it is certainly up to them to decide whether or not to serve it, but that doesn’t absolve you of guilt from scraping someone’s site when they explicitly ask you not to.

Please don’t conflate “extremely rude”, “not appropriate”, and “guilt”. Two of these are subjective opinions about what constitutes good citizenship. The last one is a legal determination that has the potential to deprive an individual of both his money and liberty. We’re discussing whether these behaviors should be legal, not whether they are necessarily polite.

I never did. You are posting in a comment thread underneath my reply about rudeness and impoliteness, ironically being somewhat rude telling me off about what not to conflate when it was never what I said.

Google will put forbidden pages in its index. It doesn’t scrape them. (The URL to the page exists even without visiting the page.)

We do, and we also use our own user-agent string: “… site rating system”. A growing number of sites reject connections based on USER-AGENT string. Try “”, for example. (We list those as “blocked”.) Some sites won’t let us read the “robots.txt” file. In some cases, the site’s USER-AGENT test forbids things the “robots.txt” allows. Another issue is finding the site’s preferred home page. We look at the site’s bare domain and its “www” host name, both with HTTP and HTTPS, trying to find the entry point.

This just looks for redirects; it doesn’t even read the content. Some sites have redirects from one of those four options to another one. In some cases, the less favored entry point has a “disallow all” robots.txt file. In some cases, the robots.txt file itself is redirected. This is like having doors with various combinations of “Keep Out” and “Please use other door” signs. In that phase, we ignore “robots.txt” but don’t read any content beyond the HTTP header. Some sites treat the four reads to find the home page as a denial of service attack and refuse connections for a while afterward. Then there’s Wix. Wix sometimes serves a completely different page if it thinks you’re a bot.

> I did an analysis and a session browsed with my specialized browser would always consume less than 100K of bandwidth (and often far less), whereas a session browsed with a conventional desktop browser would consume at least 1.2 MB, even if everything was cached, and sometimes up to 5 MB. In addition, on the desktop, a JavaScript heartbeat was sent back every few seconds, so all of that data was saved too.
Bandwidth is certainly part of it, but there’s also database and app-server load (which may be the actual bottleneck) that a scraper isn’t necessarily bypassing.

Yeah, I just have a hard time buying that a scraper that does less than a conventional desktop browser is going to accidentally stumble across something that causes the server-side to flip out. I’m not really sure in what case your hypothetical is plausible. Scrapers are usually used to get publicly-available data more efficiently. What you’re describing would basically require the scraper to hammer an invisible endpoint somewhere, but there’s no reason the scraper would do that; it just wants to get the data displayed by the site in a more efficient manner. I suppose the browser could enforce a cooldown on an expensive callback via JavaScript, which a scraper would circumvent, but IMO that’s not a fair reason to say scrapers are disallowed; cooldowns should be enforced server-side. There’s no way to ensure that a user is going to execute your script. That’s just part of the deal. Everything about scrapers means less server load: no images, no wandering around the site trying to find the right place, no heavy JavaScript callbacks that invoke server-side application load, etc. Scrapers are just highly-optimized browsing devices targeting specific pieces of data; it’s logical that they would be cheaper to serve than a desktop user who’s concerned about aesthetics and the like. In our specific case, those JavaScripts we didn’t download included instructions to make over 100 AJAX requests on every page load. No wonder users were looking for something more efficient. I agree that a scraper isn’t necessarily bypassing some load-heavy operations, but I find it highly implausible that a non-malicious scraper would be invoking operations that cause extra load (beyond just hitting the site too often). Frankly, I’d be surprised if there was a functional scraper that regularly invoked more resource cost per-session than a typical desktop browsing session to get equivalent data.

> What you’re describing would basically require the scraper to hammer an invisible endpoint somewhere
That wasn’t my point. My point was: a lot of a website’s costs are hidden from a web scraper (e.g. database load), so a scraper can’t claim, based on the variables they can observe (bandwidth), that they’re costing the website less than normal traffic. I was basically responding to statements like this:
> In fact, scrapers usually cause less impact because they usually don’t download images or execute JavaScript.
There’s really no way for a scraper to know that unless the website tells them. Their usage pattern is different than typical users and raw bandwidth (for stuff like static images) may not matter to the website.

It’s true that there’s no way to know that for sure, but it doesn’t make sense that a scraper, by virtue of its being a scraper, is incurring additional load. A scraper is only making requests that a person with a desktop browser or any other appliance that speaks HTTP could make. What’s the difference between a user clicking the same button on the page 50 times or holding down F5 and a scraper that pings a page once a minute? Your argument is basically boiling down to “scrapers could hit one load-heavy endpoint too fast”, but so could desktop browsers. So I don’t see what it has to do with scraping.

> but it doesn’t make sense that a scraper, by virtue of its being a scraper, is incurring additional load
It does, because scrapers don’t have normal usage patterns. They’re robots and behave like robots.
> What’s the difference between a user clicking the same button on t

Frequently Asked Questions about data scraping legality

Is it legal to scrape data?

Web scraping and crawling aren’t illegal by themselves. After all, you could scrape or crawl your own website, without a hitch. … Big companies use web scrapers for their own gain but also don’t want others to use bots against them.

Can you get sued for scraping a website?

Yes but as you say, they apply regardless. More specifically, they apply to data that you have (and are storing), not the act of obtaining it. As a private individual it’s not hard to comply either, for private use. If you publish it, it becomes a different story, because it’s PII.

Is Web scraping for commercial use legal?

If you’re doing web crawling for your own purposes, it is legal as it falls under fair use doctrine. The complications start if you want to use scraped data for others, especially commercial purposes. … As long as you are not crawling at a disruptive rate and the source is public you should be fine. (Jul 17, 2019)