How to Scrape Amazon Product Data: Names, Pricing, ASIN, etc.

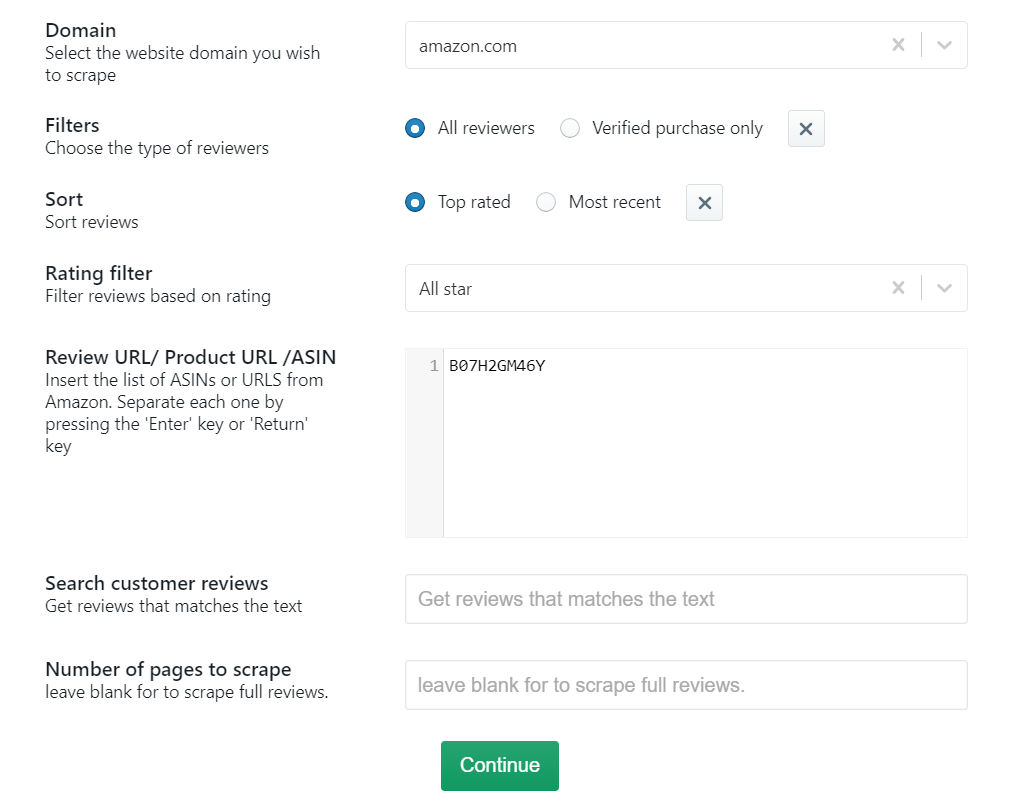

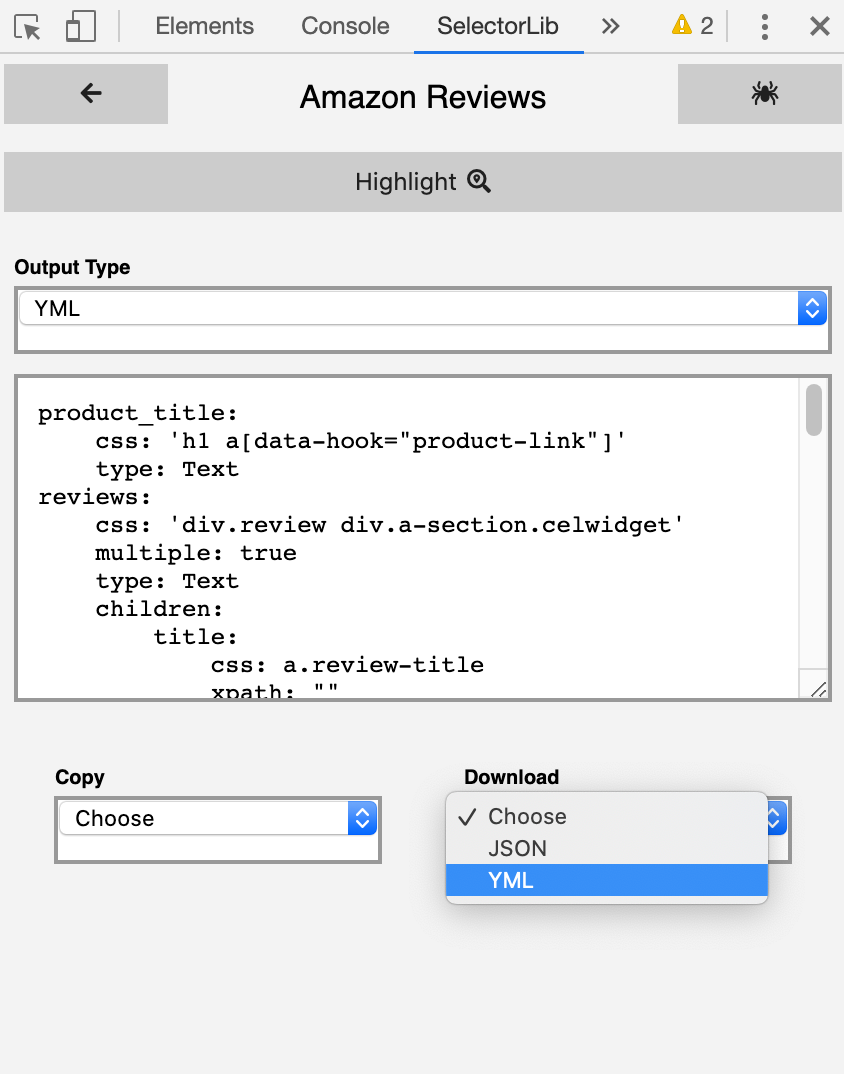

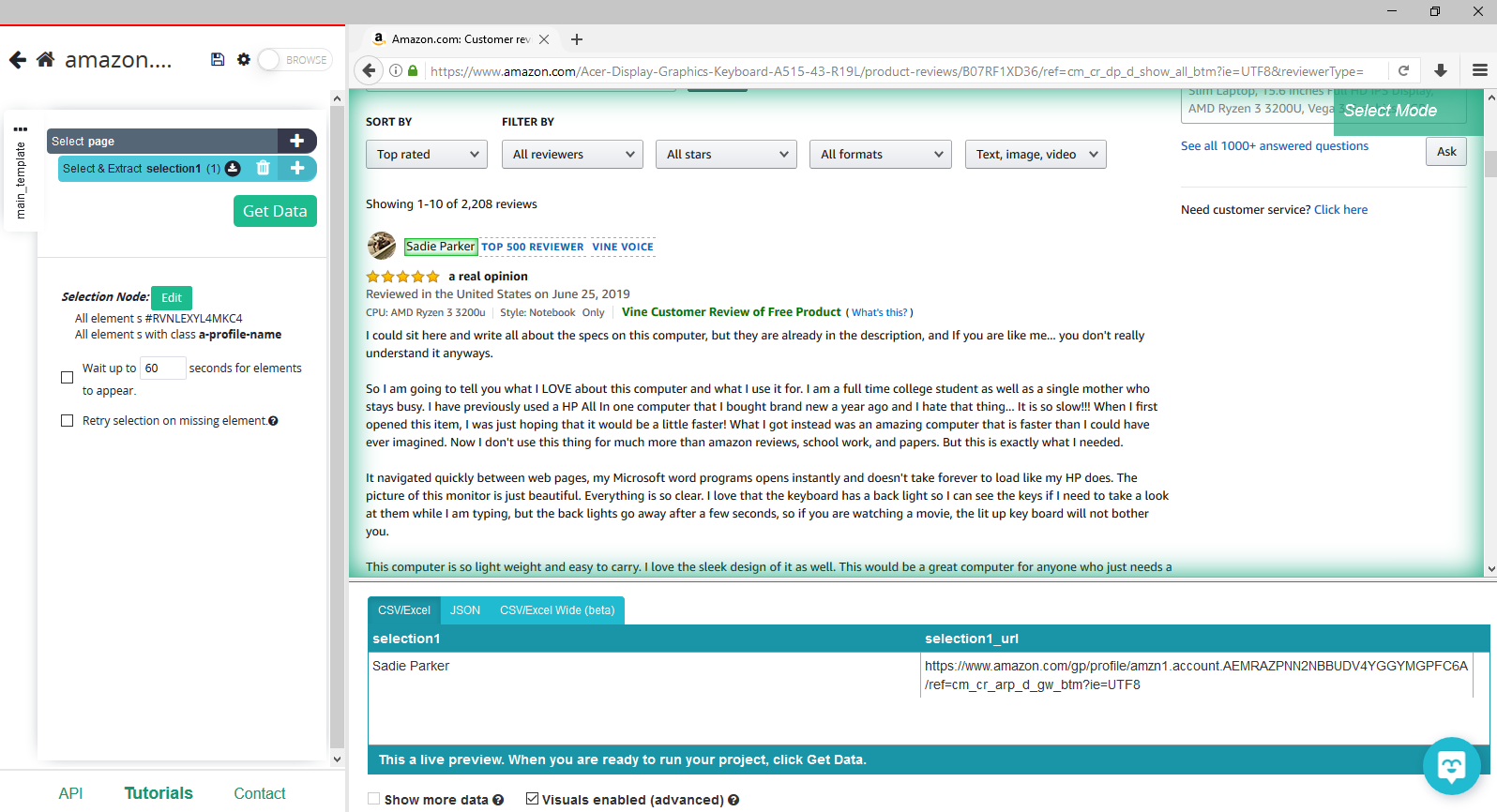

Amazon offers numerous services on its ecommerce platform. One thing it does not offer, though, is easy access to its product data. There is currently no way to simply export product data from Amazon to a spreadsheet for any business needs you might have, whether for competitor research, comparison shopping, or building an API for your app. Web scraping easily solves this problem.

Amazon Web Scraping

Web scraping will allow you to select the specific data you want from the Amazon website and export it into a spreadsheet or JSON file. You could even make this an automated process that runs on a daily, weekly or monthly basis to continuously update your data.

For this project, we will use ParseHub, a free and powerful web scraper that can work with any website. Make sure to download and install ParseHub for free before getting started.

Scraping Amazon Product Data

For this example, we will scrape product data from Amazon’s results page for “computer monitor”. We will extract information available both on the results page and on each of the product pages.

Getting Started

First, make sure to download and install ParseHub. We will use this web scraper for this project.

Open ParseHub, click on “New Project” and use the URL from Amazon’s results page. The page will now be rendered inside the app.

Scraping Amazon Results Page

Once the site is rendered, click on the product name of the first result on the page. In this case, we will ignore the sponsored listings. The name you’ve clicked will become green to indicate that it’s been selected.

The rest of the product names will be highlighted in yellow. Click on the second one on the list. Now all of the items will be highlighted in green.

On the left sidebar, rename your selection to product. You will notice that ParseHub is now extracting the product name and URL for each product.

On the left sidebar, click the PLUS(+) sign next to the product selection and choose the Relative Select command.

Using the Relative Select command, click on the first product name on the page and then on its listing price. You will see an arrow connect the two selections.

Expand the new command you’ve created and then delete the URL that is also being extracted by default.

Repeat the three Relative Select steps above to also extract the product star rating, the number of reviews and the product image. Make sure to rename your new selections accordingly.

Tip: The method above will only extract the image URL for each product. Want to download the actual image file from the site? Read our guide on how to scrape and download images with ParseHub.

We have now selected all the data we wanted to scrape from the results page.

Scraping Amazon Product Page

Now, we will tell ParseHub to click on each of the products we’ve selected and extract additional data from each page. In this case, we will extract the product ASIN, Screen Size and Screen Resolution.

First, on the left sidebar, click on the 3 dots next to the main_template text.

Rename your template to search_results_page. Templates help ParseHub keep different page layouts separate.

Now use the PLUS(+) button next to the product selection and choose the “Click” command. A pop-up will appear asking you if this link is a “next page” button. Click “No” and, next to Create New Template, enter a new template name. In this case, we will use product_page.

ParseHub will now automatically create this new template and render the Amazon product page for the first product on the list.

Scroll down to the “Product Information” part of the page and, using the Select command, click on the first element of the list. In this case, it will be the Screen Size item.

Like we have done before, keep on selecting the items until they all turn green. Rename this selection to labels.

Expand the labels selection and remove the begin new entry in labels command.

Now click the PLUS(+) sign next to the labels selection and use the Conditional command. This will allow us to only pull some of the info from these items.

For our first Conditional command, we will use the following expression:

$e.text.contains("Screen Size")

We will then use the PLUS(+) sign next to our conditional command to add a Relative Select command. We will now use this Relative Select command to first click on the Screen Size text and then on the actual measurement next to it (in this case, 21.5 inches).

Now ParseHub will extract the product’s screen size into its own column. We can copy-paste the conditional command we just created to pull other information. Just make sure to edit the conditional expression. For example, the ASIN expression will be:

$e.text.contains("ASIN")
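For readers who think in code, the Conditional plus Relative Select pattern can be pictured as a filter over label/value pairs. The sketch below is plain Python, not ParseHub syntax. The `rows` data, the `pick` helper and the ASIN value are made up for illustration.

```python
# Hypothetical label/value rows, as they might appear in a
# "Product Information" table. The values are invented for this example.
rows = [
    ("Screen Size", "21.5 Inches"),
    ("ASIN", "B000000000"),
    ("Brand", "ExampleBrand"),
]


def pick(rows, wanted):
    """Return the value of the first row whose label contains `wanted`, else None."""
    for label, value in rows:
        if wanted in label:  # same idea as a "text contains" conditional
            return value
    return None


# One conditional per column we want, each paired with the value next to the label.
record = {
    "screen_size": pick(rows, "Screen Size"),
    "asin": pick(rows, "ASIN"),
}
print(record)
```

Each call to `pick` plays the role of one conditional: it keeps only the row whose label matches and returns the value beside it, so every attribute ends up in its own column.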

Lastly, make sure that your conditional selections are aligned properly so they are not nested amongst themselves. You can drag and drop the selections to fix this.

Want to scrape reviews as well? Check our guide on how to scrape Amazon reviews using a free web scraper.

Now, you might want to scrape several pages’ worth of data for this project. So far, we are only scraping page 1 of the search results. Let’s set up ParseHub to navigate to the following results pages.

On the left sidebar, return to the search_results_page template. You might also need to change the browser tab to the search results page as well.

Click on the PLUS(+) sign next to the page selection and choose the Select command.

Then select the Next page link at the bottom of the Amazon page. Rename the selection to next_button.

By default, ParseHub will extract the text and URL from this link, so expand your new next_button selection and remove these 2 commands.

Now, click on the PLUS(+) sign of your next_button selection and use the Click command.

A pop-up will appear asking if this is a “Next” link. Click Yes and enter the number of pages you’d like to navigate to. In this case, we will scrape 9 additional pages.

Running and Exporting your Project

Now that we are done setting up the project, it’s time to run our scrape job.

On the left sidebar, click on the “Get Data” button and click on the “Run” button to run your scrape. For longer projects, we recommend doing a Test Run to verify that your data will be formatted correctly.

After the scrape job is completed, you will be able to download all the information you’ve requested as a handy spreadsheet or as a JSON file.

Final Thoughts

And that’s it! You are now ready to scrape Amazon data to your heart’s desire.

But why stop there? With the skills you’ve just learned, you could scrape almost any other website.

This post was originally published on August 29th, 2019 and last updated on November 9th, 2020.

5 Major Challenges That Make Amazon Data Scraping Painful

Amazon has been on the cutting edge of collecting, storing, and analyzing large amounts of data, be it customer data, product information, data about retailers, or even information on general market trends. Since Amazon is one of the largest e-commerce websites, a lot of analysts and firms depend on the data extracted from it to derive actionable insights.

The growing e-commerce industry demands sophisticated analytical techniques to predict market trends, study customer temperament, or get a competitive edge over the myriad of players in this sector. To augment the strength of these analytical techniques, you need high-quality, reliable data. This data is called alternative data and can be derived from multiple sources. Some of the most prominent sources of alternative data in the e-commerce industry are customer reviews, product information, and even geographical data. E-commerce websites are a great source for a lot of these data elements.

It is no news that Amazon has been at the forefront of the e-commerce industry for quite some time now. Retailers fight tooth and nail to scrape data from Amazon. However, Amazon data scraping is not easy! Let us go through a few issues you may face while scraping data from Amazon.

Why is Amazon Data Scraping Challenging?

Before you start Amazon data scraping, you should know that the website discourages scraping in its policy and page structure. Due to its vested interest in protecting its data, Amazon has basic anti-scraping measures in place. These might stop your scraper from extracting all the information you need. Besides that, the page structure may differ between products, which can break your scraper’s code and logic. The worst part is that you might not foresee this issue, and you might also run into network errors and unknown responses. Furthermore, captcha issues and IP (Internet Protocol) blocks might be a regular roadblock.
You will feel the need for a database, and the lack of one might be a huge issue! You will also need to take care of exceptions while writing the algorithm for your scraper. This will come in handy when you are trying to work around complex page structures, unconventional (non-ASCII) characters, and other issues like funny URLs and huge memory requirements.

Let us talk about a few of these issues in detail. We shall also cover how to solve them. Hopefully, this will help you scrape data from Amazon successfully.

1. Amazon can detect Bots and block their IPs

Since Amazon tries to prevent web scraping on its pages, it can easily detect whether an action is being executed by a scraper bot or by a person using a browser. Many of these patterns are identified by closely monitoring the behavior of the browsing agent. For example, if your URLs repeatedly change by only a query parameter at a regular interval, this is a clear indication of a scraper running through the pages. Amazon thus uses captchas and IP bans to block such bots. While this step is necessary to protect the privacy and integrity of the information, one might still need to extract some data from Amazon web pages. Here are some workarounds:

Rotate the IPs through different proxy servers if you need to. You can also deploy a consumer-grade VPN service with IP rotation.

Add random time-gaps and pauses in your scraper code to break the regularity of page requests.

Remove the query parameters from the URLs to remove identifiers linking requests together.

Set the scraper headers to make it look like the requests are coming from a browser and not a piece of code.

2. A lot of product pages on Amazon have varying page structures

If you have ever attempted to scrape product descriptions and other data from Amazon, you might have run into a lot of unknown response errors and exceptions. This is because most scrapers are designed and customized for a particular page structure.
A scraper follows a particular page structure, extracts its HTML, and then collects the relevant data. However, if the structure of the page changes, the scraper might fail if it is not designed to handle exceptions. A lot of products on Amazon have pages whose attributes differ from the standard template. This is often done to cater to different types of products that may have different key attributes and features that need to be highlighted. To address these inconsistencies, write the code so that it handles exceptions. Furthermore, your code should be resilient. You can do this by including ‘try-catch’ blocks that ensure the code does not fail at the first occurrence of a network error or a time-out error. Since you will be scraping particular attributes of a product, you can design the scraper to look for each attribute using techniques like ‘string matching’ after extracting the complete HTML of the target page.

3. Your scraper might not be efficient enough!

Ever had a scraper run for hours to get you a few hundred thousand rows? This might be because you haven’t taken care of the efficiency and speed of the algorithm. You can do some basic math while designing the algorithm. Let us see what you can do to solve this problem!

You will always know the number of products or sellers you need to extract information about. Using this number, you can roughly calculate the number of requests you need to send every second to complete your data scraping exercise. Once you compute this, your aim is to design your scraper to meet this condition!

It is highly likely that single-threaded, network-blocking operations will be too slow if you want to speed things up! You will probably want to create multi-threaded scrapers! This allows your CPU to work in a parallel fashion!
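The request-rate arithmetic and the multi-threaded approach described above can be sketched in Python as follows. This is a minimal illustration, not a production scraper: `required_rate`, `fake_fetch` and `fetch_all` are hypothetical names, the page count and deadline are invented figures, and `fake_fetch` only sleeps to imitate network latency instead of contacting any site.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def required_rate(total_pages, deadline_seconds):
    """Requests per second needed to finish total_pages within the deadline."""
    return total_pages / deadline_seconds


def fake_fetch(url):
    """Stand-in for a network call; sleeps to mimic a slow response."""
    time.sleep(0.05)
    return url, 200


def fetch_all(urls, workers):
    """Fetch every URL with a pool of worker threads; results keep input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fake_fetch, urls))


if __name__ == "__main__":
    # Illustrative figures: 100,000 pages in 10 hours needs roughly 2.8 requests per second.
    print(round(required_rate(100_000, 10 * 3600), 2))

    urls = [f"https://example.com/item/{i}" for i in range(20)]
    start = time.perf_counter()
    results = fetch_all(urls, workers=10)
    print(len(results), "pages in", round(time.perf_counter() - start, 2), "s")
```

In Python, threads help here mainly because each request spends most of its time waiting on the network, so while one thread waits the CPU can process another response.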
With multiple threads, the CPU will be working on one response or another, even when each request takes several seconds to complete. This might give you almost 100x the speed of your original single-threaded scraper! You will need an efficient scraper to crawl through Amazon, as there is a lot of information on the site!

4. You might need a cloud platform and other computational aids!

A high-performance machine will speed the process up for you, and you can avoid burning the resources of your local system! To scrape a website like Amazon, you might need high-capacity memory resources, as well as network pipes and cores with high efficiency. A cloud-based platform should be able to provide these resources.

You do not want to run into memory issues! If you store big lists or dictionaries in memory, you put an extra burden on your machine’s resources. We advise you to transfer your data to permanent storage as soon as possible. This will also help you speed up the process.

There is an array of cloud services that you can use for reasonable prices. You can avail one of these services using simple steps. It will also help you avoid unnecessary system crashes and delays in the process.

5. Use a database for recording information

If you scrape data from Amazon or any other retail website, you will be collecting high volumes of data. Since the process of scraping consumes power and time, we advise you to keep storing this data in a database. Store each product or seller record that you crawl as a row in a database table. You can also use databases to perform operations like basic querying, exporting, and deduping on your data. This makes the process of storing, analyzing, and reusing your data convenient and faster!

Summary

A lot of businesses and analysts, especially in the retail and e-commerce sector, need Amazon data scraping.
They use this data to compare prices, study market trends across demographics, forecast product sales, review customer sentiment, or even estimate competition rates. This can be a repetitive exercise, and creating your own scraper can be time-consuming and challenging.

However, Datahut can scrape e-commerce product information for you from a wide range of web sources and provide this data in readable file formats like CSV or in other database locations as per client needs. You can then use this data for all your subsequent analyses. This will help you save resources and time. We advise you to conduct thorough research on the various data scraping services in the market. You may then avail the service that suits your requirements the best.
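To make challenges 1, 2 and 5 above more concrete, here is a minimal Python sketch under stated assumptions. The helper names (`strip_query`, `fetch_with_retries`, `open_db`, `save_product`), the header values, the pause lengths and the table layout are all illustrative choices, not anything prescribed by the article. The `fetch` argument is a hypothetical callable that you would supply yourself, for example a thin wrapper around your HTTP client of choice.

```python
import random
import sqlite3
import time
from urllib.parse import urlsplit, urlunsplit

# Browser-like headers; the exact values are illustrative assumptions.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
                  "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Accept-Language": "en-US,en;q=0.9",
}


def strip_query(url):
    """Drop the query string and fragment so requests share no identifiers."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))


def fetch_with_retries(url, fetch, attempts=3, max_pause=2.0, sleep=time.sleep):
    """Call fetch(url, headers) with a random pause before each try.

    Network and time-out errors are caught so one bad page does not stop the
    run. Returns whatever fetch returns, or None if every attempt failed.
    """
    for _ in range(attempts):
        sleep(random.uniform(0.5, max_pause))  # break the regular request rhythm
        try:
            return fetch(strip_query(url), HEADERS)
        except OSError:  # URLError and socket time-outs are OSError subclasses
            continue
    return None


def open_db(path=":memory:"):
    """Open (or create) a SQLite database with one row per product."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products "
        "(asin TEXT PRIMARY KEY, title TEXT, price REAL)"
    )
    return conn


def save_product(conn, asin, title, price):
    """Insert or update a product; the ASIN primary key de-duplicates re-crawls."""
    conn.execute(
        "INSERT OR REPLACE INTO products (asin, title, price) VALUES (?, ?, ?)",
        (asin, title, price),
    )
    conn.commit()
```

Passing `fetch` and `sleep` in as parameters keeps the sketch independent of any particular HTTP library and makes it easy to try out with a stand-in function before pointing it at a real site. Writing each record to the database as soon as it is parsed also keeps large lists out of memory, in line with challenge 4.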

Is Web Scraping Illegal? Depends on What the Meaning of the Word Is

Depending on who you ask, web scraping can be loved or hated.

Web scraping has existed for a long time and, in its good form, it’s a key underpinning of the internet. “Good bots” enable, for example, search engines to index web content, price comparison services to save consumers money, and market researchers to gauge sentiment on social media.

“Bad bots,” however, fetch content from a website with the intent of using it for purposes outside the site owner’s control. Bad bots make up 20 percent of all web traffic and are used to conduct a variety of harmful activities, such as denial of service attacks, competitive data mining, online fraud, account hijacking, data theft, stealing of intellectual property, unauthorized vulnerability scans, spam and digital ad fraud.

So, is it Illegal to Scrape a Website?

So is it legal or illegal? Web scraping and crawling aren’t illegal by themselves. After all, you could scrape or crawl your own website, without a hitch.

Startups love it because it’s a cheap and powerful way to gather data without the need for partnerships. Big companies use web scrapers for their own gain but also don’t want others to use bots against them.

The general opinion on the matter does not seem to matter anymore because in the past 12 months it has become very clear that the federal court system is cracking down more than ever.

Let’s take a look back. Web scraping started in a legal grey area where the use of bots to scrape a website was simply a nuisance. Not much could be done about the practice until 2000, when eBay sought a preliminary injunction against Bidder’s Edge. eBay claimed that the use of bots on the site, against the will of the company, violated trespass to chattels law.

The court granted the injunction because users had to opt in and agree to the terms of service on the site, and because a large number of bots could be disruptive to eBay’s computer systems. The lawsuit was settled out of court, so it never came to a head, but the legal precedent was set.

In 2001 however, a travel agency sued a competitor who had “scraped” its prices from its Web site to help the rival set its own prices. The judge ruled that the fact that this scraping was not welcomed by the site’s owner was not sufficient to make it “unauthorized access” for the purpose of federal hacking laws.

Two years later, the legal standing of eBay v. Bidder’s Edge was implicitly overruled in Intel v. Hamidi, a case interpreting California’s common law trespass to chattels. It was the wild west once again. Over the next several years the courts ruled time and time again that simply putting “do not scrape us” in your website terms of service was not enough to create a legally binding agreement. For you to enforce that term, a user must explicitly agree or consent to the terms. This left the field wide open for scrapers to do as they wished.

Fast forward a few years and you start seeing a shift in opinion. In 2009 Facebook won one of the first copyright suits against a web scraper. This laid the groundwork for numerous lawsuits that tie web scraping to a direct copyright violation and very clear monetary damages. The most recent case is AP v. Meltwater, in which the court stripped away what is referred to as fair use on the internet.

Previously, for academic, personal, or information aggregation purposes, people could rely on fair use and use web scrapers. The court gutted the fair use defense that companies had used to defend web scraping. The court determined that even small percentages, sometimes as little as 4.5% of the content, are significant enough to not fall under fair use. The only caveat the court made was based on the simple fact that this data was available for purchase. Had it not been, it is unclear how they would have ruled. Then a few months back the gauntlet was dropped.

Andrew Auernheimer was convicted of hacking based on the act of web scraping. Although the data was unprotected and publicly available via AT&T’s website, the fact that he wrote web scrapers to harvest that data en masse amounted to a “brute force attack”. He did not have to consent to terms of service to deploy his bots and conduct the web scraping. The data was not available for purchase. It wasn’t behind a login. He did not even financially gain from the aggregation of the data. Most importantly, it was buggy programming by AT&T that exposed this information in the first place. Yet Andrew was at fault. This isn’t just a civil suit anymore. This charge is a felony violation that is on par with hacking or denial of service attacks and carries up to a 15-year sentence for each charge.

In 2016, Congress passed its first legislation specifically to target bad bots — the Better Online Ticket Sales (BOTS) Act, which bans the use of software that circumvents security measures on ticket seller websites. Automated ticket scalping bots use several techniques to do their dirty work including web scraping that incorporates advanced business logic to identify scalping opportunities, input purchase details into shopping carts, and even resell inventory on secondary markets.

To counteract this type of activity, the BOTS Act:

Prohibits the circumvention of a security measure used to enforce ticket purchasing limits for an event with an attendance capacity of greater than 200 persons.

Prohibits the sale of an event ticket obtained through such a circumvention violation if the seller participated in, had the ability to control, or should have known about it.

Treats violations as unfair or deceptive acts under the Federal Trade Commission Act. The bill provides authority to the FTC and states to enforce against such violations.

In other words, if you’re a venue, organization or ticketing software platform, it is still on you to defend against this fraudulent activity during your major onsales.

The UK seems to have followed the US with its Digital Economy Act 2017, which achieved Royal Assent in April. The Act seeks to protect consumers in a number of ways in an increasingly digital society, including by “cracking down on ticket touts by making it a criminal offence for those that misuse bot technology to sweep up tickets and sell them at inflated prices in the secondary market.”

In the summer of 2017, LinkedIn sued hiQ Labs, a San Francisco-based startup. hiQ was scraping publicly available LinkedIn profiles to offer clients, according to its website, “a crystal ball that helps you determine skills gaps or turnover risks months ahead of time.”

You might find it unsettling to think that your public LinkedIn profile could be used against you by your employer.

Yet a judge on Aug. 14, 2017 decided this is okay. Judge Edward Chen of the U.S. District Court in San Francisco agreed with hiQ’s claim in a lawsuit that Microsoft-owned LinkedIn violated antitrust laws when it blocked the startup from accessing such data. He ordered LinkedIn to remove the barriers within 24 hours. LinkedIn has filed to appeal.

The ruling contradicts previous decisions clamping down on web scraping. And it opens a Pandora’s box of questions about social media user privacy and the right of businesses to protect themselves from data hijacking.

There’s also the matter of fairness. LinkedIn spent years creating something of real value. Why should it have to hand it over to the likes of hiQ — paying for the servers and bandwidth to host all that bot traffic on top of their own human users, just so hiQ can ride LinkedIn’s coattails?

I am in the business of blocking bots. Chen’s ruling has sent a chill through those of us in the cybersecurity industry devoted to fighting web-scraping bots.

I think there is a legitimate need for some companies to be able to prevent unwanted web scrapers from accessing their site.

In October of 2017, and as reported by Bloomberg, Ticketmaster sued Prestige Entertainment, claiming it used computer programs to illegally buy as many as 40 percent of the available seats for performances of “Hamilton” in New York and the majority of the tickets Ticketmaster had available for the Mayweather v. Pacquiao fight in Las Vegas two years ago.

Prestige continued to use the illegal bots even after it paid $3.35 million to settle New York Attorney General Eric Schneiderman’s probe into the ticket resale industry.

Under that deal, Prestige promised to abstain from using bots, Ticketmaster said in the complaint. Ticketmaster asked for unspecified compensatory and punitive damages and a court order to stop Prestige from using bots.

Are the existing laws too antiquated to deal with the problem? Should new legislation be introduced to provide more clarity? Most sites don’t have any web scraping protections in place. Do the companies have some burden to prevent web scraping?

As the courts try to further decide the legality of scraping, companies are still having their data stolen and the business logic of their websites abused. Instead of looking to the law to eventually solve this technology problem, it’s time to start solving it with anti-bot and anti-scraping technology today.


Frequently Asked Questions about web scraper for amazon

Is Web scraping allowed on Amazon?

Since Amazon prevents web scraping on its pages, it can easily detect if an action is being executed by a scraper bot or through a browser by a manual agent. … It thus uses captchas and IP bans to block such bots. (Oct 27, 2020)

Is Web scraping legal?

So is it legal or illegal? Web scraping and crawling aren’t illegal by themselves. After all, you could scrape or crawl your own website, without a hitch. … Big companies use web scrapers for their own gain but also don’t want others to use bots against them.

How can I scrape data from Amazon?

Right-click and scrape: go to the Amazon website and run a search. When you are on the search results page you want to scrape, right-click and choose the “Scrap Asin From This Page” option. The information will be extracted and saved as a CSV file. (Aug 9, 2021)