4 Tools for Web Scraping in Node.js – Twilio

Sometimes the data you need is available online, but not through a dedicated REST API. Luckily for JavaScript developers, there are a variety of tools available in Node.js for scraping and parsing data directly from websites to use in your projects and applications.

Let’s walk through 4 of these libraries to see how they work and how they compare to each other.

Make sure you have up-to-date versions of Node.js (at least 12.0.0) and npm installed on your machine. Run the terminal command in the directory where you want your code to live:

For some of these applications, we’ll be using the Got library for making HTTP requests, so install that with this command in the same directory:

Let’s try finding all of the links to unique MIDI files on this web page from the Video Game Music Archive with a bunch of Nintendo music as the example problem we want to solve for each of these libraries.

Tips and tricks for web scraping

Before moving onto specific tools, there are some common themes that are going to be useful no matter which method you decide to use.

Before writing code to parse the content you want, you typically will need to take a look at the HTML that’s rendered by the browser. Every web page is different, and sometimes getting the right data out of them requires a bit of creativity, pattern recognition, and experimentation.

There are helpful developer tools available to you in most modern browsers. If you right-click on the element you’re interested in, you can inspect the HTML behind that element to get more insight.

You will also frequently need to filter for specific content. This is often done using CSS selectors, which you will see throughout the code examples in this tutorial, to gather HTML elements that fit specific criteria. Regular expressions are also very useful in many web scraping situations. On top of that, if you need a little more granularity, you can write functions to filter through the content of elements, such as this one for determining whether a hyperlink tag refers to a MIDI file:

    const isMidi = (link) => {
      // Return false if there is no href attribute.
      if (typeof link.href === 'undefined') { return false; }

      return link.href.includes('.mid');
    };
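A filter like isMidi can be as loose or as strict as the page requires. As a hedged variant, the sketch below parses the href with Node's built-in URL class and checks only the end of the path, so a link is accepted only when the file extension itself is .mid. The name isMidiStrict, the example.com base URL, and the sample links are all hypothetical and are not part of the original tutorial.

```javascript
// Hypothetical stricter filter: accept a link only if its path ends in ".mid".
// Works on any object with an href property (a jsdom anchor or a plain object).
const isMidiStrict = (link, base = 'https://example.com/') => {
  // Return false if there is no usable href attribute.
  if (!link || typeof link.href !== 'string' || link.href === '') return false;

  try {
    // URL resolves relative hrefs against the (assumed) base.
    const { pathname } = new URL(link.href, base);
    return pathname.toLowerCase().endsWith('.mid');
  } catch (err) {
    // Malformed URLs are treated as non-matches.
    return false;
  }
};

// Made-up links for illustration only.
const sampleLinks = [
  { href: 'songs/overworld.mid' },
  { href: 'https://example.com/files/THEME.MID?download=1' },
  { href: '/about/midi-files.html' },
  { href: 'notes.midnight.txt' },
  {},
];

// Wrap the call in an arrow function so filter()'s index argument
// is not mistaken for the base URL.
const midiLinks = sampleLinks.filter(link => isMidiStrict(link));
console.log(midiLinks.map(link => link.href));
```

Only the first two sample links pass. The last three are rejected because their paths do not end in .mid, even though one of them contains ".mid" as a substring.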

It is also good to keep in mind that many websites prohibit web scraping in their Terms of Service, so always remember to double-check this beforehand. With that, let’s dive into the specifics!

jsdom

jsdom is a pure-JavaScript implementation of many web standards for Node.js, and is a great tool for testing and scraping web applications. Install it in your terminal using the following command:

The following code is all you need to gather all of the links to MIDI files on the Video Game Music Archive page referenced earlier:

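    const got = require('got');
    const jsdom = require('jsdom');
    const { JSDOM } = jsdom;

    // Set this to the URL of the Video Game Music Archive page you want to scrape.
    const vgmUrl = '';

    const isMidi = (link) => {
      // Return false if there is no href attribute.
      if (typeof link.href === 'undefined') { return false; }

      return link.href.includes('.mid');
    };

    const noParens = (link) => {
      // Regular expression to determine if the text has parentheses.
      const parensRegex = /^((?!\().)*$/;
      return parensRegex.test(link.textContent);
    };

    (async () => {
      const response = await got(vgmUrl);
      const dom = new JSDOM(response.body);

      // Create an Array out of the HTML Elements for filtering using spread syntax.
      const nodeList = [...dom.window.document.querySelectorAll('a')];

      nodeList.filter(isMidi).filter(noParens).forEach(link => {
        console.log(link.href);
      });
    })();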

This uses a very simple query selector, a, to access all hyperlinks on the page, along with a few functions to filter through this content to make sure we’re only getting the MIDI files we want. The noParens() filter function uses a regular expression to leave out all of the MIDI files that contain parentheses, which means they are just alternate versions of the same song.
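To make the regular expression a little less opaque: a pattern of the form `/^((?!\().)*$/` matches a string only if none of its characters is an opening parenthesis, so it behaves like a "does not contain (" test. Here is a small sketch using made-up link text (the titles below are hypothetical, not taken from the archive page):

```javascript
// The pattern walks the string one character at a time; the negative
// lookahead (?!\() refuses to advance onto an opening parenthesis.
const parensRegex = /^((?!\().)*$/;

const titles = [
  'Overworld Theme',
  'Overworld Theme (2)',
  'Castle (Remix)',
  'Ending',
];

const withoutParens = titles.filter(title => parensRegex.test(title));
console.log(withoutParens); // [ 'Overworld Theme', 'Ending' ]

// For single-line text, a plain substring check gives the same result.
const sameResult = titles.filter(title => !title.includes('('));
console.log(sameResult);
```

The two approaches differ only for text containing line breaks, because `.` does not match a newline character.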

Save that code to a file, and run it with the node command in your terminal.

If you want a more in-depth walkthrough on this library, check out this other tutorial I wrote on using jsdom.

Cheerio

Cheerio is a library that is similar to jsdom but was designed to be more lightweight, making it much faster. It implements a subset of core jQuery, providing an API that many JavaScript developers are familiar with.

Install it with the following command:

    npm install cheerio@1.0.0-rc.3

The code we need to accomplish this same task is very similar:

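    const cheerio = require('cheerio');
    const got = require('got');

    // Set this to the URL of the Video Game Music Archive page you want to scrape.
    const vgmUrl = '';

    // Cheerio passes (index, element) to filter callbacks.
    const isMidi = (i, link) => {
      // Return false if there is no href attribute.
      if (typeof link.attribs.href === 'undefined') { return false; }

      return link.attribs.href.includes('.mid');
    };

    const noParens = (i, link) => {
      // Regular expression to determine if the text has parentheses.
      const parensRegex = /^((?!\().)*$/;
      return parensRegex.test(link.children[0].data);
    };

    (async () => {
      const response = await got(vgmUrl);
      const $ = cheerio.load(response.body);

      $('a').filter(isMidi).filter(noParens).each((i, link) => {
        const href = link.attribs.href;
        console.log(href);
      });
    })();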

Here you can see that using functions to filter through content is built into Cheerio’s API, so we don’t need any extra code for converting the collection of elements to an array. Replace the code in your file with this new code, and run it again. The execution should be noticeably quicker because Cheerio is a less bulky library.

If you want a more in-depth walkthrough, check out this other tutorial I wrote on using Cheerio.

Puppeteer

Puppeteer is much different than the previous two in that it is primarily a library for headless browser scripting. Puppeteer provides a high-level API to control Chrome or Chromium over the DevTools protocol. It’s much more versatile because you can write code to interact with and manipulate web applications rather than just reading static data.

    npm install puppeteer@5.5.0

Web scraping with Puppeteer is much different than the previous two tools because rather than writing code to grab raw HTML from a URL and then feeding it to an object, you’re writing code that is going to run in the context of a browser processing the HTML of a given URL and building a real document object model out of it.

The following code snippet instructs Puppeteer’s browser to go to the URL we want and access all of the same hyperlink elements that we parsed for previously:

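    const puppeteer = require('puppeteer');

    // Set this to the URL of the Video Game Music Archive page you want to scrape.
    const vgmUrl = '';

    (async () => {
      const browser = await puppeteer.launch();

      const page = await browser.newPage();
      await page.goto(vgmUrl);

      // The callback runs inside the browser page, so the regular
      // expression has to be declared within it.
      const links = await page.$$eval('a', elements => elements.filter(element => {
        const parensRegex = /^((?!\().)*$/;
        return element.href.includes('.mid') && parensRegex.test(element.textContent);
      }).map(element => element.href));

      links.forEach(link => console.log(link));

      await browser.close();
    })();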

Notice that we are still writing some logic to filter through the links on the page, but instead of declaring more filter functions, we’re just doing it inline. There is some boilerplate code involved for telling the browser what to do, but we don’t have to use another Node module for making a request to the website we’re trying to scrape. Overall it’s a lot slower if you’re doing simple things like this, but Puppeteer is very useful if you are dealing with pages that aren’t static.

For a more thorough guide on how to use more of Puppeteer’s features to interact with dynamic web applications, I wrote another tutorial that goes deeper into working with Puppeteer.

Playwright

Playwright is another library for headless browser scripting, written by the same team that built Puppeteer. Its API and functionality are nearly identical to Puppeteer’s, but it was designed to be cross-browser and works with Firefox and WebKit as well as Chrome/Chromium.

    npm install playwright@0.13.0

The code for doing this task using Playwright is largely the same, with the exception that we need to explicitly declare which browser we’re using:

    const playwright = require('playwright');

This code should do the same thing as the code in the Puppeteer section and should behave similarly. The advantage to using Playwright is that it is more versatile as it works with more than just one type of browser. Try running this code using the other browsers and seeing how it affects the behavior of your script.

As with the other libraries, I wrote another tutorial that goes deeper into working with Playwright if you want a longer walkthrough.

The vast expanse of the World Wide Web

Now that you can programmatically grab things from web pages, you have access to a huge source of data for whatever your projects need. One thing to keep in mind is that changes to a web page’s HTML might break your code, so make sure to keep everything up to date if you’re building applications that rely on scraping.

I’m looking forward to seeing what you build. Feel free to reach out and share your experiences or ask any questions.

Email:

Twitter: @Sagnewshreds

GitHub: Sagnew

Twitch (streaming live code): Sagnewshreds

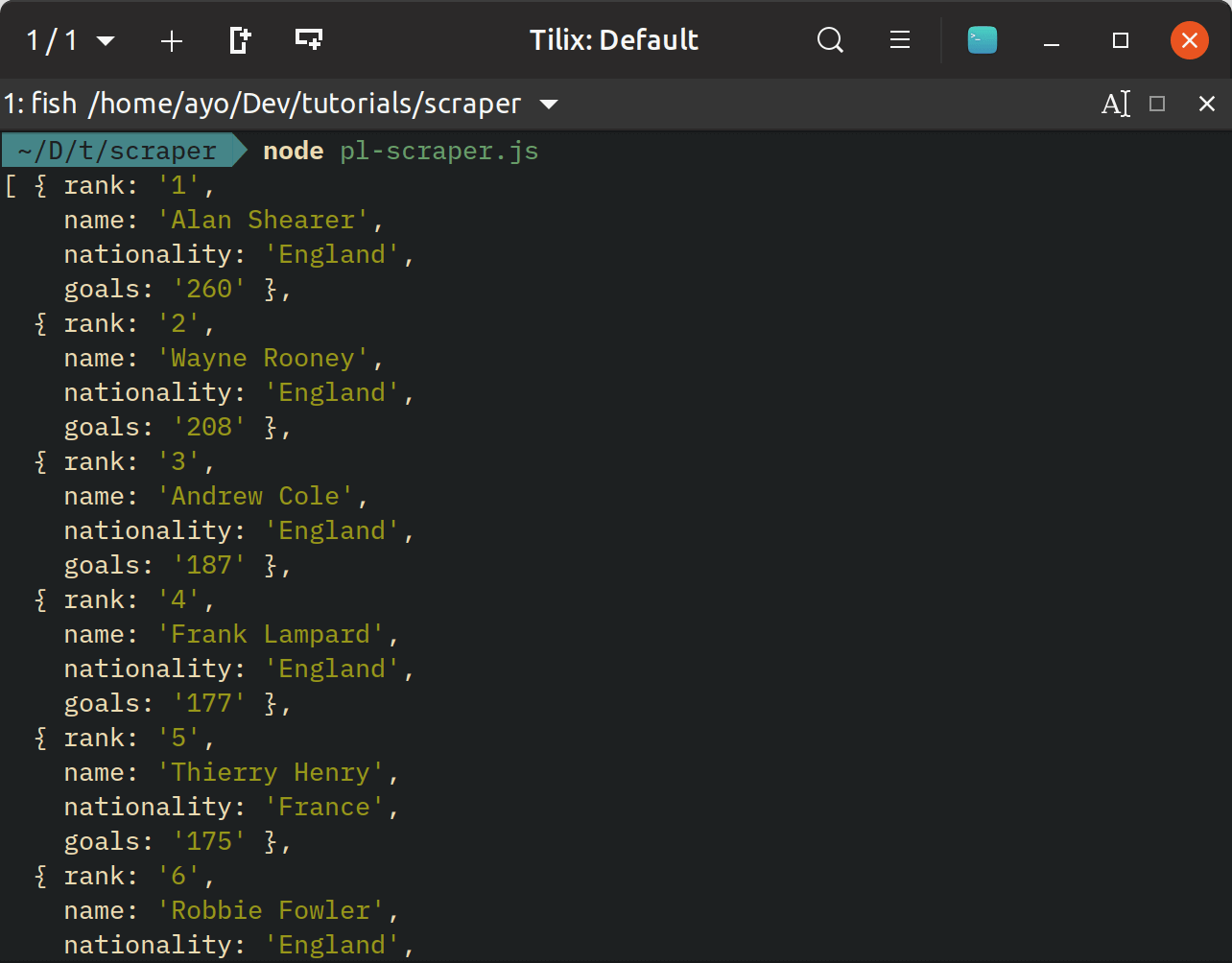

How To Scrape a Website with Node.js | DigitalOcean

Introduction

Web scraping is the technique of extracting data from websites. This data can further be stored in a database or any other storage system for analysis or other uses. While extracting data from websites can be done manually, web scraping usually refers to an automated process.

Web scraping is used by most bots and web crawlers for data extraction. There are various methodologies and tools you can use for web scraping, and in this tutorial, we will be focusing on using a technique that involves DOM parsing a webpage.

Prerequisites

Web scraping can be done in virtually any programming language that has support for HTTP and XML or DOM parsing. In this tutorial, we will focus on web scraping using JavaScript in a server environment.

With that in mind, this tutorial assumes that readers have the following:

Understanding of JavaScript and ES6 and ES7 syntax

Familiarity with jQuery

Functional programming concepts

Next, we will go through what our end project will be.

Project Specs

We will be using web scraping to extract some data from the Scotch website. Scotch does not provide an API for fetching the profiles and tutorials/posts of authors. So, we will be building an API for fetching the profiles and tutorials/posts of Scotch authors.

Here is a screenshot of a demo app based on the API we will build in this tutorial.

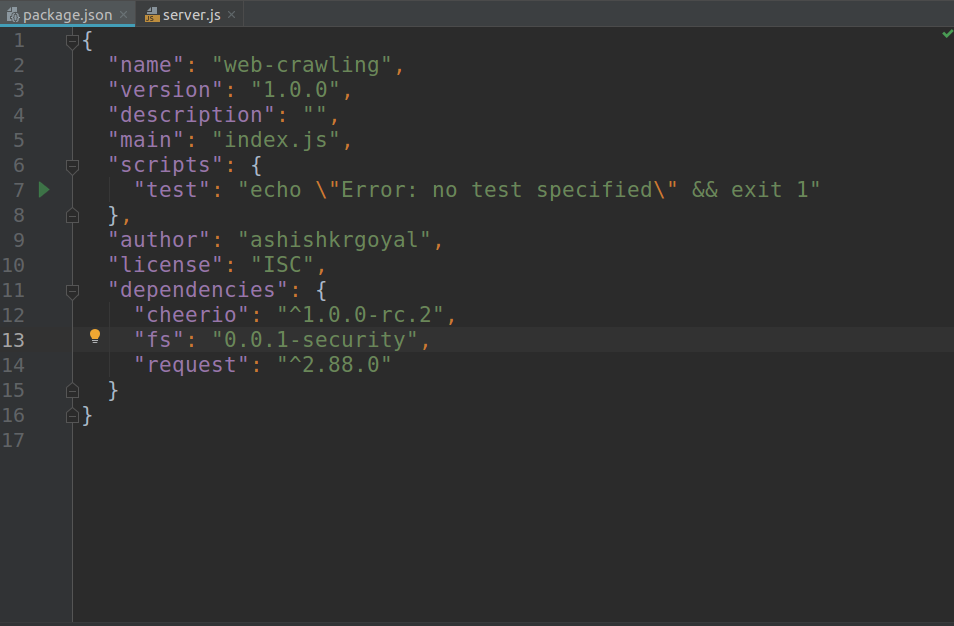

Before we begin, let’s go over the packages and dependencies you will need to complete this project.

Project Setup

Before you begin, ensure that you have Node and npm or yarn installed on your machine. Since we will use a lot of ES6/7 syntax in this tutorial, it is recommended that you use the following versions of Node and npm for complete ES6/7 support: Node 8.9.0 or higher and npm 5.2.0 or higher.

We will be using the following core packages:

Cheerio – Cheerio is a fast, flexible, and lean implementation of core jQuery designed specifically for the server. It makes DOM parsing very easy.

Axios – Axios is a promise-based HTTP client for the browser and Node.js. It will enable us to fetch page contents through HTTP requests.

Express – Express is a minimal and flexible web application framework that provides a robust set of features for web and mobile applications.

Lodash – Lodash is a modern JavaScript utility library delivering modularity, performance & extras. It makes JavaScript easier by taking the hassle out of working with arrays, numbers, objects, strings, etc.

Step 1 — Create the Application Directory

Create a new directory for the application:

mkdir scotch-scraping

Change to the new directory:

cd scotch-scraping

Initiate a new package:

npm init -y

And install app dependencies:

npm install express morgan axios cheerio lodash

Step 2 — Set Up the Express Server Application

We will go ahead to set up an HTTP server application using Express. Create a file in the root directory of your application and add the following code snippet to set up the server:

    // Require dependencies
    const logger = require('morgan');
    const express = require('express');

    // Create an Express application
    const app = express();

    // Configure the app port
    const port = process.env.PORT || 3000;
    app.set('port', port);

    // Load middlewares
    app.use(logger('dev'));

    // Start the server and listen on the preconfigured port
    app.listen(port, () => console.log(`App started on port ${port}.`));

Step 3 — Modify npm scripts

Finally, we will modify the "scripts" section of the package.json file to look like the following snippet:

    "scripts": {
      "start": "node "
    }

We have gotten all we need to start building our application. If you run the command npm start in your terminal now, it will start up the application server on port 3000 if it is available. However, we cannot access any route yet since we are yet to add routes to our application. Let’s start building some helper functions we will need for web scraping.

Step 4 — Create Helper Functions

As stated earlier, we will create a couple of helper functions that will be used in several parts of our application. Create a new app directory in your project root. Create a new file named helpers.js in the newly created directory and add the following content to it:

app/helpers.js

    const _ = require('lodash');
    const axios = require('axios');
    const cheerio = require('cheerio');

In this code, we are requiring the dependencies we will need for our helper functions. Let’s go ahead and add the helper functions.

Creating Utility Helper Functions

We will start by creating some utility helper functions. Add the following snippet to the app/helpers.js file.

app/helpers.js

    ///////////////////////////////////////////////////////////////////////////////
    // UTILITY FUNCTIONS
    ///////////////////////////////////////////////////////////////////////////////

    /**
     * Compose function arguments starting from right to left
     * to an overall function and returns the overall function
     */
    const compose = (...fns) => arg => {
      return _.flattenDeep(fns).reduceRight((current, fn) => {
        if (_.isFunction(fn)) return fn(current);
        throw new TypeError("compose() expects only functions as parameters.");
      }, arg);
    };

    /**
     * Compose async function arguments starting from right to left
     * to an overall async function and returns the overall async function
     */
    const composeAsync = (...fns) => arg => {
      return _.flattenDeep(fns).reduceRight(async (current, fn) => {
        if (_.isFunction(fn)) return fn(await current);
        throw new TypeError("compose() expects only functions as parameters.");
      }, arg);
    };

    /**
     * Enforces the scheme of the URL is https
     * and returns the new URL
     */
    const enforceHttpsUrl = url =>
      _.isString(url) ? url.replace(/^(https?:)?\/\//, "https://") : null;

    /**
     * Strips number of all non-numeric characters
     * and returns the sanitized number
     */
    const sanitizeNumber = number =>
      _.isString(number)
        ? number.replace(/[^0-9-.]/g, "")
        : _.isNumber(number) ? number : null;

    /**
     * Filters null values from array
     * and returns an array without nulls
     */
    const withoutNulls = arr =>
      _.isArray(arr) ? arr.filter(val => !_.isNull(val)) : [];

    /**
     * Transforms an array of ({ key: value }) pairs to an object
     * and returns the transformed object
     */
    const arrayPairsToObject = arr =>
      arr.reduce((obj, pair) => ({ ...obj, ...pair }), {});

    /**
     * A composed function that removes null values from array of ({ key: value }) pairs
     * and returns the transformed object of the array
     */
    const fromPairsToObject = compose(arrayPairsToObject, withoutNulls);

Let’s go through the functions one at a time to understand what they do.

compose() – This is a higher-order function that takes one or more functions as its arguments and returns a composed function. The composed function has the same effect as invoking the functions passed in as arguments from right to left, passing the result of a function invocation as an argument to the next function each time.

If any of the arguments passed to compose() is not a function, the composed function will throw an error whenever it is invoked. Here is a code snippet that describes how compose() works.

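    /**
     * -------------------------------------------------
     * Method 1: Functions in sequence
     * -------------------------------------------------
     */
    function1(function2(function3(arg)));

    /**
     * -------------------------------------------------
     * Method 2: Using compose()
     * -------------------------------------------------
     * Invoking the composed function has the same effect as (Method 1)
     */
    const composedFunction = compose(function1, function2, function3);
    composedFunction(arg);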
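To see the right-to-left order with concrete values, here is a minimal sketch of compose() that uses only built-in array methods in place of lodash, so it handles a flat argument list and does not flatten nested arrays. The three small functions are hypothetical stand-ins for function1, function2, and function3.

```javascript
// Simplified compose(): built-ins only, no nested-array flattening.
const compose = (...fns) => arg =>
  fns.reduceRight((current, fn) => {
    if (typeof fn === 'function') return fn(current);
    throw new TypeError('compose() expects only functions as parameters.');
  }, arg);

// Hypothetical example functions.
const addOne = n => n + 1;
const double = n => n * 2;
const toLabel = n => `value: ${n}`;

// Runs addOne first, then double, then toLabel.
const describeNumber = compose(toLabel, double, addOne);

console.log(describeNumber(4));            // value: 10
console.log(toLabel(double(addOne(4))));   // value: 10

// A non-function argument is only detected when the composed function runs.
const broken = compose(double, 'not a function');
let composeError = null;
try {
  broken(1);
} catch (err) {
  composeError = err;
}
console.log(composeError instanceof TypeError); // true
```

Note that compose() itself never throws; the check happens inside the returned function, which is why the error only surfaces at call time.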

composeAsync() – This function works in the same way as the compose() function. The only difference is that it is asynchronous: each function receives the awaited result of the previous one, and the composed function returns a promise. Hence, it is ideal for composing functions that have asynchronous behavior – for example, functions that return promises.
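As a rough illustration of the asynchronous variant, the sketch below builds a lodash-free composeAsync() and chains two promise-returning steps with one ordinary function. The step functions (fetchCount, addBonus, summarize) are hypothetical and simply stand in for real network or parsing work.

```javascript
// Simplified composeAsync(): each step awaits the previous step's result.
const composeAsync = (...fns) => arg =>
  fns.reduceRight(async (current, fn) => {
    if (typeof fn === 'function') return fn(await current);
    throw new TypeError('composeAsync() expects only functions as parameters.');
  }, arg);

// Hypothetical async steps standing in for network or file operations.
const fetchCount = async id => ({ id, count: 3 });
const addBonus = async record => ({ ...record, count: record.count + 2 });
const summarize = record => `#${record.id} has ${record.count} items`;

// Steps run right to left: fetchCount, then addBonus, then summarize.
const loadSummary = composeAsync(summarize, addBonus, fetchCount);

const summaryPromise = loadSummary(7);
summaryPromise.then(summary => console.log(summary)); // #7 has 5 items
```

Because the reducer is an async function, the composed function always returns a promise, even when the last step (summarize here) is synchronous.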

enforceHttpsUrl() – This function takes a url string as argument and returns the URL with the https scheme, provided the url begins with either http://, https://, or //. If the url is not a string, then null is returned. Here is an example.

    enforceHttpsUrl(''); // returns => ''
    enforceHttpsUrl('//'); // returns => 'https://'
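For a fuller picture, here is a sketch of the same idea with a plain typeof check standing in for lodash. It assumes the pattern rewrites a leading http://, https://, or // to https://, as described above; the example.com addresses are placeholders, not URLs from the tutorial.

```javascript
// Same idea as enforceHttpsUrl(), with a typeof check standing in for lodash.
const enforceHttpsUrl = url =>
  typeof url === 'string' ? url.replace(/^(https?:)?\/\//, 'https://') : null;

console.log(enforceHttpsUrl('http://example.com/a'));  // https://example.com/a
console.log(enforceHttpsUrl('https://example.com/a')); // https://example.com/a
console.log(enforceHttpsUrl('//example.com/a'));       // https://example.com/a
console.log(enforceHttpsUrl('example.com/a'));         // example.com/a (no scheme to replace)
console.log(enforceHttpsUrl(42));                      // null
```

A string with no scheme and no leading // passes through unchanged, so callers that need an absolute URL still have to resolve relative paths themselves.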

sanitizeNumber() – This function expects a number or string as argument. If a number is passed to it, it returns the number. However, if a string is passed to it, it removes non-numeric characters from the string and returns the sanitized string. For other value types, it returns null. Here is an example:

    sanitizeNumber(53.56); // returns => 53.56
    sanitizeNumber('-2oo,40'); // returns => '-240'
    sanitizeNumber('badnumber.decimal'); // returns => '.'

withoutNulls() – This function expects an array as an argument and returns a new array that only contains the non-null items of the original array. Here is an example.

    withoutNulls(['String', [], null, {}, null, 54]); // returns => ['String', [], {}, 54]

arrayPairsToObject() – This function expects an array of ({ key: value}) objects, and returns a transformed object with the keys and values. Here is an example.

    const pairs = [{ key1: 'value1' }, { key2: 'value2' }, { key3: 'value3' }];
    arrayPairsToObject(pairs); // returns => { key1: 'value1', key2: 'value2', key3: 'value3' }

fromPairsToObject() – This is a composed function created using compose(). It has the same effect as executing:

arrayPairsToObject( withoutNulls(array));
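Here is a small runnable sketch of that combination, written with built-ins instead of lodash. The scraped pairs are made up for illustration; null stands for a field that could not be extracted.

```javascript
// Lodash-free versions of the two helpers.
const withoutNulls = arr =>
  Array.isArray(arr) ? arr.filter(val => val !== null) : [];

const arrayPairsToObject = arr =>
  arr.reduce((obj, pair) => ({ ...obj, ...pair }), {});

// Right to left: drop nulls first, then merge the remaining pairs.
const fromPairsToObject = pairs => arrayPairsToObject(withoutNulls(pairs));

// Hypothetical scraped fields; null marks a field that could not be extracted.
const scraped = [{ name: 'Jane Doe' }, null, { posts: 12 }, null, { name: 'J. Doe' }];

console.log(fromPairsToObject(scraped)); // { name: 'J. Doe', posts: 12 }
console.log(fromPairsToObject('oops'));  // {}
```

When the same key appears more than once, the later pair wins, because each pair is spread over the accumulated object.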

Request and Response Helper Functions

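Add the following to the app/helpers.js file.

app/helpers.js

    ///////////////////////////////////////////////////////////////////////////////
    // REQUEST AND RESPONSE HELPER FUNCTIONS
    ///////////////////////////////////////////////////////////////////////////////

    /**
     * Handles the request(Promise) when it is fulfilled
     * and sends a JSON response to the HTTP response stream(res).
     */
    const sendResponse = res => async request => {
      return await request
        .then(data => res.json({ status: "success", data }))
        .catch(({ status: code = 500 }) =>
          res.status(code).json({
            status: "failure",
            code,
            message: code == 404 ? 'Not found.' : 'Request failed.'
          })
        );
    };

    /**
     * Loads the html string returned for the given URL
     * and sends a Cheerio parser instance of the loaded HTML
     */
    const fetchHtmlFromUrl = async url => {
      return await axios
        .get(enforceHttpsUrl(url))
        .then(response => cheerio.load(response.data))
        .catch(error => {
          error.status = (error.response && error.response.status) || 500;
          throw error;
        });
    };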

Here, we have added two new functions: sendResponse() and fetchHtmlFromUrl(). Let’s try to understand what they do.

sendResponse() – This is a higher-order function that expects an Express HTTP response stream (res) as its argument and returns an async function. The returned async function expects a promise or a thenable as its argument (request).

If the request promise resolves, then a successful JSON response is sent using res.json(), containing the resolved data. If the promise rejects, then an error JSON response with an appropriate HTTP status code is sent. Here is how it can be used in an Express route:

    app.get('/path', (req, res, next) => {
      const request = Promise.resolve([1, 2, 3, 4, 5]);
      sendResponse(res)(request);
    });

Making a GET request to the /path endpoint will return this JSON response:

    {
      "status": "success",
      "data": [1, 2, 3, 4, 5]
    }
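To watch both the success and the failure branch run without starting a server, the sketch below calls sendResponse() with a fake response object. createFakeRes is a hypothetical helper that mimics only the status() and json() methods of an Express response; it is not part of Express or of the tutorial's code.

```javascript
const sendResponse = res => async request => {
  return await request
    .then(data => res.json({ status: 'success', data }))
    .catch(({ status: code = 500 }) =>
      res.status(code).json({
        status: 'failure',
        code,
        message: code == 404 ? 'Not found.' : 'Request failed.'
      })
    );
};

// Hypothetical stand-in for Express's res: records what would be sent.
const createFakeRes = () => {
  const res = { statusCode: 200, body: null };
  res.status = code => { res.statusCode = code; return res; };
  res.json = payload => { res.body = payload; return res; };
  return res;
};

const okRes = createFakeRes();
const notFoundRes = createFakeRes();

// An error carrying a status property, as fetchHtmlFromUrl() would produce.
const notFound = Object.assign(new Error('missing'), { status: 404 });

const done = Promise.all([
  sendResponse(okRes)(Promise.resolve([1, 2, 3])),
  sendResponse(notFoundRes)(Promise.reject(notFound)),
]).then(() => {
  console.log(okRes.statusCode, okRes.body);
  console.log(notFoundRes.statusCode, notFoundRes.body);
  return [okRes, notFoundRes];
});
```

An error without a status property falls back to 500 through the default value in the destructuring pattern.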

fetchHtmlFromUrl() – This is an async function that expects a url string as its argument. First, it uses axios.get() to fetch the content of the URL (which returns a promise). If the promise resolves, it uses cheerio.load() with the returned content to create a Cheerio parser instance, and then returns the instance. However, if the promise rejects, it throws an error with an appropriate status code.

The Cheerio parser instance that is returned by this function will enable us to extract the data we require. We can use it in much the same way as we use the jQuery instance returned by calling $() or jQuery() on a DOM target.

DOM Parsing Helper Functions

Let’s add some additional functions to help us with DOM parsing. Add the following content to the app/helpers.js file.

app/helpers.js

    ///////////////////////////////////////////////////////////////////////////////
    // HTML PARSING HELPER FUNCTIONS
    ///////////////////////////////////////////////////////////////////////////////

    /**
     * Fetches the inner text of the element
     * and returns the trimmed text
     */
    const fetchElemInnerText = elem => (elem.text && elem.text().trim()) || null;

    /**
     * Fetches the specified attribute from the element
     * and returns the attribute value
     */
    const fetchElemAttribute = attribute => elem =>
      (elem.attr && elem.attr(attribute)) || null;

    /**
     * Extract an array of values from a collection of elements
     * using the extractor function and returns the array
     * or the return value from calling transform() on array
     */
    const extractFromElems = extractor => transform => elems => $ => {
      const results = elems.map((i, element) => extractor($(element))).get();
      return _.isFunction(transform) ? transform(results) : results;
    };

    /**
     * A composed function that extracts number text from an element,
     * sanitizes the number text and returns the parsed integer
     */
    const extractNumber = compose(parseInt, sanitizeNumber, fetchElemInnerText);

    /**
     * A composed function that extracts url string from the element's attribute(attr)
     * and returns the url with https scheme
     */
    const extractUrlAttribute = attr =>
      compose(enforceHttpsUrl, fetchElemAttribute(attr));

    module.exports = {
      compose,
      composeAsync,
      enforceHttpsUrl,
      sanitizeNumber,
      withoutNulls,
      arrayPairsToObject,
      fromPairsToObject,
      sendResponse,
      fetchHtmlFromUrl,
      fetchElemInnerText,
      fetchElemAttribute,
      extractFromElems,
      extractNumber,
      extractUrlAttribute
    };

We’ve added a few more functions. Here are the functions and what they do:

fetchElemInnerText() – This function expects an element as argument. It extracts the innerText of the element by calling elem.text(), trims the text of surrounding whitespace, and returns the trimmed inner text. Here is an example.

    const $ = cheerio.load('<div class="fullname">  Glad Chinda  </div>');
    const elem = $('div.fullname');

    fetchElemInnerText(elem); // returns => 'Glad Chinda'

fetchElemAttribute() – This is a higher-order function that expects an attribute as argument and returns another function that expects an element as argument. The returned function extracts the value of the given attribute of the element by calling elem.attr(attribute). Here is an example.

    const $ = cheerio.load('<div class="username" title="Glad Chinda"></div>');
    const elem = $('div.username');

    // fetchTitle is a function that expects an element as argument
    const fetchTitle = fetchElemAttribute('title');

    fetchTitle(elem); // returns => 'Glad Chinda'

extractFromElems() – This is a higher-order function that returns another higher-order function. Here, we have used a functional programming technique known as currying to create a sequence of functions each requiring just one argument. Here is the sequence of arguments:

extractorFunction -> transformFunction -> elementsCollection -> cheerioInstance

extractFromElems() makes it possible to extract data from a collection of similar elements using an extractor function, and also transform the extracted data using a transform function. The extractor function receives an element as an argument, while the transform function receives an array of values as an argument.

Let’s say we have a collection of elements, each containing the name of a person as innerText. We want to extract all these names and return them in an array, all in uppercase. Here is how we can do this using extractFromElems():

    const $ = cheerio.load('<div><span>Glad Chinda</span><span>John Doe</span><span>Brendan Eich</span></div>');

    // Get the collection of span elements containing names
    const elems = $('div span');

    // The transform function
    const transformUpperCase = values => values.map(val => String(val).toUpperCase());

    // The arguments sequence: extractorFn => transformFn => elemsCollection => cheerioInstance($)
    // fetchElemInnerText is used as extractor function
    const extractNames = extractFromElems(fetchElemInnerText)(transformUpperCase)(elems);

    // Finally pass in the cheerioInstance($)
    extractNames($); // returns => ['GLAD CHINDA', 'JOHN DOE', 'BRENDAN EICH']

extractNumber() – This is a composed function that expects an element as argument and tries to extract a number from the innerText of the element. It does this by composing parseInt(), sanitizeNumber() and fetchElemInnerText(). It has the same effect as executing:

parseInt( sanitizeNumber( fetchElemInnerText(elem)));
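To make the behavior of this chain concrete, the sketch below reproduces the three helpers without lodash and runs them against a fake element. fakeElem is a hypothetical stand-in that provides only the text() method of a Cheerio element, and the sample strings are invented.

```javascript
// Minimal compose(), sufficient for this illustration.
const compose = (...fns) => arg =>
  fns.reduceRight((current, fn) => fn(current), arg);

const sanitizeNumber = number =>
  typeof number === 'string'
    ? number.replace(/[^0-9.-]/g, '')
    : typeof number === 'number' ? number : null;

const fetchElemInnerText = elem => (elem.text && elem.text().trim()) || null;

const extractNumber = compose(parseInt, sanitizeNumber, fetchElemInnerText);

// Hypothetical stand-in for a Cheerio element: only text() is needed here.
const fakeElem = text => ({ text: () => text });

console.log(extractNumber(fakeElem('  1,204 views ')));  // 1204
console.log(extractNumber(fakeElem('$45.99')));          // 45 (parseInt drops the fraction)
console.log(extractNumber(fakeElem('no digits')));       // NaN
console.log(extractNumber(fakeElem('')));                // NaN (empty text becomes null)
```

Since parseInt() returns NaN when there is nothing numeric to parse, callers may want to check the result with Number.isNaN() before using it.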

extractUrlAttribute() – This is a composed higher-order function that expects an attribute as argument and returns another function that expects an element as argument. The returned function tries to extract the URL value of an attribute in the element and returns it with the https scheme. Here is a snippet that shows how it works:

// METHOD 1

const fetchAttribute = fetchElemAttribute(attr);

enforceHttpsUrl(fetchAttribute(elem));

// METHOD 2: Using extractUrlAttribute()

const fetchUrlAttribute = extractUrlAttribute(attr);

fetchUrlAttribute(elem);
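To see the two methods produce the same result without a parser, the sketch below models an element as a plain object with an attrs map. All three helpers are assumed implementations written for illustration (hence the Sketch suffix); the tutorial's real helpers operate on Cheerio elements.

```javascript
// Right-to-left function composition
const compose = (...fns) => arg => fns.reduceRight((acc, fn) => fn(acc), arg);

// Assumed helper: read an attribute from a plain-object "element", or null
const fetchAttrSketch = attr => elem => (elem && elem.attrs && elem.attrs[attr]) || null;

// Assumed helper: rewrite "http://", "https://" and protocol-relative "//" to "https://"
const enforceHttpsSketch = url =>
  typeof url === 'string' ? url.replace(/^(https?:)?\/\//, 'https://') : null;

// The composed, curried version: attribute first, element second
const extractUrlAttrSketch = attr => compose(enforceHttpsSketch, fetchAttrSketch(attr));

const image = { attrs: { src: '//cdn.example.com/avatar.png' } };
const link = { attrs: { href: 'http://example.com/page' } };

console.log(extractUrlAttrSketch('src')(image));
console.log(extractUrlAttrSketch('href')(link));
console.log(extractUrlAttrSketch('href')(image)); // attribute is missing
```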

Finally, we export all the helper functions we have created using module.exports. Now that we have our helper functions, we can proceed to the web scraping part of this tutorial.

Step 5 — Set Up Scraping by Calling the URL

Create a new file named scotch.js in the app directory of your project and add the following content to it:

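const _ = require('lodash');

// Import helper functions

const {

  compose,

  composeAsync,

  extractNumber,

  enforceHttpsUrl,

  fetchHtmlFromUrl,

  extractFromElems,

  fromPairsToObject,

  fetchElemInnerText,

  fetchElemAttribute,

  extractUrlAttribute

} = require("./helpers");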

// (Base URL)

const SCOTCH_BASE = “;

// HELPER FUNCTIONS

/**
 * Resolves the url as relative to the base scotch url
 * and returns the full URL
 */

const scotchRelativeUrl = url =>

  _.isString(url) ? `${SCOTCH_BASE}${url.replace(/^\/*/, "/")}` : null;

/**
 * A composed function that extracts a url from element attribute,
 * resolves it to the Scotch base url and returns the url with https
 */

const extractScotchUrlAttribute = attr =>

compose(enforceHttpsUrl, scotchRelativeUrl, fetchElemAttribute(attr));

As you can see, we imported lodash as well as some of the helper functions we created earlier. We also defined a constant named SCOTCH_BASE that contains the base URL of the Scotch website. Finally, we added two helper functions:

scotchRelativeUrl() – This function takes a relative url string as argument and returns the URL with the pre-configured SCOTCH_BASE prepended to it. Here is an example.

scotchRelativeUrl(‘tutorials’); // returns => ”

scotchRelativeUrl(‘//tutorials’); // returns => ”

scotchRelativeUrl(”); // returns => ”
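The behavior described above can be sketched in isolation. The base URL below (https://example.com) and the function name toAbsoluteUrl are hypothetical placeholders chosen for this example, not the values used by the tutorial.

```javascript
// Hypothetical base URL used only for this sketch
const EXAMPLE_BASE = 'https://example.com';

// Replace any run of leading slashes (including none) with exactly one,
// then prepend the base. Non-string input yields null.
const toAbsoluteUrl = url =>
  typeof url === 'string' ? `${EXAMPLE_BASE}${url.replace(/^\/*/, '/')}` : null;

console.log(toAbsoluteUrl('tutorials'));   // https://example.com/tutorials
console.log(toAbsoluteUrl('//tutorials')); // https://example.com/tutorials
console.log(toAbsoluteUrl(''));            // https://example.com/
console.log(toAbsoluteUrl(undefined));     // null
```

The quantifier in the pattern is greedy on purpose: a lazy `\/*?` would match zero slashes, so '//tutorials' would come out with three leading slashes instead of one.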

extractScotchUrlAttribute() – This is a composed higher-order function that expects an attribute as argument and returns another function that expects an element as argument. The returned function tries to extract the URL value of an attribute in the element, prepends the pre-configured SCOTCH_BASE to it, and returns it with the https scheme. Here is a snippet that shows how it works:

// METHOD 1

const fetchAttribute = fetchElemAttribute(attr);

enforceHttpsUrl(scotchRelativeUrl(fetchAttribute(elem)));

// METHOD 2: Using extractScotchUrlAttribute()

const fetchUrlAttribute = extractScotchUrlAttribute(attr);

fetchUrlAttribute(elem);

We want to be able to extract the following data for any Scotch author:

profile: (name, role, avatar, etc.)

social links: (Facebook, Twitter, GitHub, etc.)

stats: (total views, total posts, etc.)

posts

If you recall, the extractFromElems() helper function we created earlier requires an extractor function for extracting content from a collection of similar elements. We are going to define some extractor functions in this section.

First, we will create an extractSocialUrl() function for extracting the social network name and URL from a social link element. Here is the DOM structure of the social link element expected by extractSocialUrl().

Calling the extractSocialUrl() function should return an object that looks like the following:

{ github: ”}

Let’s go on to create the function. Add the following content to the app/scotch.js file.

// EXTRACTION FUNCTIONS

/**
 * Extract a single social URL pair from container element
 */

const extractSocialUrl = elem => {

  // Find the social-icon element

  const icon = elem.find('.icon');

  // Regex for social classes

  const regex = /^(?:icon|color)-(.+)$/;

  // Extracts only social classes from the class attribute

  const onlySocialClasses = regex => (classes = '') => classes

    .replace(/\s+/g, ' ')

    .split(' ')

    .filter(classname => regex.test(classname));

  // Gets the social network name from a class name

  const getSocialFromClasses = regex => classes => {

    let social = null;

    const [classname = null] = classes;

    if (_.isString(classname)) {

      const [, name = null] = classname.match(regex);

      social = name ? _.snakeCase(name) : null;

    }

    return social;

  };

  // Extract the href URL from the element

  const href = extractUrlAttribute('href')(elem);

  // Get the social-network name using a composed function

  const social = compose(

    getSocialFromClasses(regex),

    onlySocialClasses(regex),

    fetchElemAttribute('class')

  )(icon);

  // Return an object of social-network-name(key) and social-link(value)

  // Else return null if no social-network-name was found

  return social && { [social]: href };

};

Let’s try to understand how the extractSocialUrl() function works:

First, we fetch the child element with an icon class. We also define a regular expression that matches social-icon class names.

We define the onlySocialClasses() higher-order function, which takes a regular expression as its argument and returns a function. The returned function takes a string of class names separated by spaces. It then uses the regular expression to extract only the social class names from the list and returns them in an array. Here is an example:

const extractSocial = onlySocialClasses(regex);

const classNames = 'first-class another-class color-twitter icon-github';

extractSocial(classNames); // returns ['color-twitter', 'icon-github']

Next, we define the getSocialFromClasses() higher-order function, which takes a regular expression as its argument and returns a function. The returned function takes an array of single class strings. It then uses the regular expression to extract the social network name from the first class in the list and returns it. Here is an example:

const extractSocialName = getSocialFromClasses(regex);

const classNames = ['color-twitter', 'icon-github'];

extractSocialName(classNames); // returns 'twitter'

Afterwards, we extract the href attribute URL from the element. We also extract the social network name from the icon element using a composed function created by composing getSocialFromClasses(regex), onlySocialClasses(regex) and fetchElemAttribute('class').

Finally, we return an object with the social network name as key and the href URL as value. However, if no social network was fetched, then null is returned. Here is an example of the returned object:

{ twitter: ”}
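The class-name pipeline described above can be tried out on plain strings. This is a simplified sketch rather than the tutorial's exact code: it omits lodash (so the network name is not snake-cased), the Sketch-suffixed names are assumptions, and the link URL is a hypothetical placeholder.

```javascript
// Right-to-left function composition
const compose = (...fns) => arg => fns.reduceRight((acc, fn) => fn(acc), arg);

const socialRegex = /^(?:icon|color)-(.+)$/;

// Keep only the class names that match the social pattern
const onlySocialClassesSketch = regex => (classes = '') =>
  classes.trim().split(/\s+/).filter(classname => regex.test(classname));

// Read the network name from the first matching class, or return null
const getSocialFromClassesSketch = regex => ([classname = null]) => {
  const match = typeof classname === 'string' ? classname.match(regex) : null;
  return match ? match[1] : null;
};

// Class attribute string in, social network name (or null) out
const socialFromClassAttr = compose(
  getSocialFromClassesSketch(socialRegex),
  onlySocialClassesSketch(socialRegex)
);

const href = 'https://example.com/some-user'; // hypothetical link
const social = socialFromClassAttr('icon icon-github');
const pair = social && { [social]: href };

console.log(pair);
console.log(socialFromClassAttr('plain-class')); // no social class present
```

The final `social && { [social]: href }` expression is what yields null when no social class is found, which lets a later step skip that link.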

Extracting Posts and Stats

Next, we will create two additional extraction functions, extractPost() and extractStat(), for extracting posts and stats respectively. Before we create the functions, let’s take a look at the DOM structure of the elements expected by these functions.

Here is the DOM structure of the element expected by extractPost().

Password Strength Meter in AngularJS

Here is the DOM structure of the element expected by extractStat().

Add the following content to the app/scotch.js file.

/**
 * Extract a single post from container element
 */

const extractPost = elem => {

  const title = elem.find('.card__title a');

  const image = elem.find('a[data-src]');

  const views = elem.find("a[title='Views'] span");

  const comments = elem.find("a[title='Comments'] span.comment-number");

  return {

    title: fetchElemInnerText(title),

    image: extractUrlAttribute('data-src')(image),

    url: extractScotchUrlAttribute('href')(title),

    views: extractNumber(views),

    comments: extractNumber(comments)

  };

};

/**
 * Extract a single stat from container element
 */

const extractStat = elem => {

  const statElem = elem.find("");

  const labelElem = elem.find('');

  const lowercase = val => _.isString(val) ? val.toLowerCase() : null;

  const stat = extractNumber(statElem);

  const label = compose(lowercase, fetchElemInnerText)(labelElem);

  return { [label]: stat };

};

The extractPost() function extracts the title, image, URL, views, and comments of a post by parsing the children of the given element. It uses a couple of helper functions we created earlier to extract data from the appropriate elements.

Here is an example of the object returned from calling extractPost().

title: “Password Strength Meter in AngularJS”,

image: “,

url: “,

views: 24280,

comments: 5}

The extractStat() function extracts the stat data contained in the given element. Here is an example of the object returned from calling extractStat().

{ pageviews: 41454}

Now we will proceed to define the extractAuthorProfile() function that extracts the complete profile of the Scotch author. Add the following content to the app/scotch.js file.

/**
 * Extract profile from a Scotch author's page using the Cheerio parser instance
 * and returns the author profile object
 */

const extractAuthorProfile = $ => {

  const mainSite = $('#sitemain');

  const metaScotch = $("meta[property='og:url']");

const scotchHero = (”);

const superGrid = (”);

const authorTitle = (“. profilename “);

const profileRole = (“”);

const profileAvatar = (“ofileavatar”);

const profileStats = (“. profilestats. profilestat”);

const authorLinks = (" a[target='_blank']");

const authorPosts = (" [data-type='post']");

const extractPosts = extractFromElems(extractPost)();

const extractStats = extractFromElems(extractStat)(fromPairsToObject);

const extractSocialUrls = extractFromElems(extractSocialUrl)(fromPairsToObject);

  return Promise.all([

    fetchElemInnerText(authorTitle.contents().first()),

    fetchElemInnerText(profileRole),

    extractUrlAttribute('content')(metaScotch),

    extractUrlAttribute('src')(profileAvatar),

    extractSocialUrls(authorLinks)($),

    extractStats(profileStats)($),

    extractPosts(authorPosts)($)

  ]).then(([author, role, url, avatar, social, stats, posts]) => ({ author, role, url, avatar, social, stats, posts }));

};

/**
 * Fetches the Scotch profile of the given author
 */

const fetchAuthorProfile = author => {

  const AUTHOR_URL = `${SCOTCH_BASE}/@${author.toLowerCase()}`;

  return composeAsync(extractAuthorProfile, fetchHtmlFromUrl)(AUTHOR_URL);

};

module.exports = { fetchAuthorProfile };

The extractAuthorProfile() function is straightforward. We first use $ (the cheerio parser instance) to find a couple of elements and element collections.

Next, we use the extractFromElems() helper function together with the extractor functions we created earlier in this section (extractPost, extractStat and extractSocialUrl) to create higher-order extraction functions. Notice how we use the fromPairsToObject helper function we created earlier as a transform function.
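To make the role of the transform function concrete, here is a sketch of what a fromPairsToObject-style transform might do. The name mergePairs and its exact behavior (including skipping null entries) are assumptions for illustration; the helper defined earlier in the tutorial may differ in detail.

```javascript
// Assumed behavior of a fromPairsToObject-style transform:
// merge an array of single-key objects into one object, skipping empty entries.
const mergePairs = pairs =>
  pairs.filter(Boolean).reduce((result, pair) => Object.assign(result, pair), {});

// Values shaped like the ones extractStat() returns
const stats = mergePairs([{ posts: 6 }, { pageviews: 41454 }, { readers: 31676 }]);

// Values shaped like the ones extractSocialUrl() returns (null when nothing matched);
// the URL is a hypothetical placeholder
const social = mergePairs([{ twitter: 'https://example.com/t' }, null]);

console.log(stats);
console.log(social);
```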

Finally, we use Promise.all() to extract all the required data, leveraging a couple of helper functions we created earlier. The extracted data is contained in an array structure following this sequence: author name, role, Scotch link, avatar link, social links, stats, and posts.

Notice how we use destructuring in the .then() promise handler to construct the final object that is returned when all the promises resolve. The returned object should look like the following:

author: ‘Glad Chinda’,

role: ‘Author’,

url: ”,

avatar: ”,

social: {

twitter: ”,

github: ”},

stats: {

posts: 6,

pageviews: 41454,

readers: 31676},

posts: [

title: ‘Password Strength Meter in AngularJS’,

image: ”,

comments: 5},… ]}
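The pattern of collecting several values and then naming them by position can be sketched on its own. The three getter functions below are hypothetical stand-ins for the extraction calls; Promise.all() accepts a mix of plain values and promises and resolves to an array in the same order as its input.

```javascript
// Hypothetical stand-ins for the extraction calls:
// some return plain values, others return promises
const getAuthor = () => 'Glad Chinda';
const getRole = () => Promise.resolve('Author');
const getStats = () => Promise.resolve({ posts: 6, pageviews: 41454 });

const buildProfile = () =>
  Promise.all([getAuthor(), getRole(), getStats()])
    // The resolved array keeps the input order, so it can be destructured by position
    .then(([author, role, stats]) => ({ author, role, stats }));

buildProfile().then(profile => console.log(profile));
```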

We also define the fetchAuthorProfile() function that accepts an author’s Scotch username and returns a Promise that resolves to the profile of the author. For an author whose username is gladchinda, the Scotch URL is

fetchAuthorProfile() uses the composeAsync() helper function to create a composed function that first fetches the DOM content of the author’s Scotch page using the fetchHtmlFromUrl() helper function, and finally extracts the profile of the author using the extractAuthorProfile() function we just created.
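One way such an asynchronous composition could be written is shown below. This is an assumed implementation for illustration (named composeAsyncSketch to keep it apart from the tutorial's helper), and fakeFetchHtml and fakeExtract are hypothetical stand-ins for fetchHtmlFromUrl() and extractAuthorProfile().

```javascript
// Right-to-left composition where every step may return a promise
const composeAsyncSketch = (...fns) => arg =>
  fns.reduceRight((promise, fn) => promise.then(fn), Promise.resolve(arg));

// Hypothetical stand-in for fetchHtmlFromUrl(): resolves to some markup
const fakeFetchHtml = url => Promise.resolve(`<h1>${url}</h1>`);

// Hypothetical stand-in for extractAuthorProfile(): a plain synchronous step
const fakeExtract = html => ({ html });

// Reads right to left: fetch first, then extract
const fetchProfileSketch = composeAsyncSketch(fakeExtract, fakeFetchHtml);

fetchProfileSketch('/@gladchinda').then(result => console.log(result));
```

Since each step is chained with then(), synchronous and asynchronous functions can be mixed freely, and a rejection in any step propagates to the caller.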

Finally, we export fetchAuthorProfile as the only identifier in the module.exports object.

Step 8 — How to Create a Route

We are almost done with our API. We need to add a route to our server to enable us to fetch the profile of any Scotch author. The route will have the following structure, where the author parameter represents the username of the Scotch author.

GET /scotch/:author

Let’s go ahead and create this route. We will make a couple of changes to the file. First, add the following to the file to require some of the functions we need.

// Require the needed functions

const { sendResponse } = require('./app/helpers');

const { fetchAuthorProfile } = require('./app/scotch');

Finally, add the route to the file immediately after the middlewares.

// Add the Scotch author profile route

app.get('/scotch/:author', (req, res, next) => {

  const author = req.params.author;

  sendResponse(res)(fetchAuthorProfile(author));

});

As you can see, we pass the author received from the route parameter to the fetchAuthorProfile() function to get the profile of the given author. We then use the sendResponse() helper method to send the returned profile as a JSON response.
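For readers who want to see how a curried helper like this could behave, the sketch below uses a minimal fake response object instead of a running server. The response payload shape, the status codes, and the names sendResponseSketch and createFakeRes are all assumptions made for this example; only the chainable res.status() and res.json() calls mirror the Express response API.

```javascript
// Assumed shape of a curried sendResponse()-style helper: it takes the response
// object first, then a promise, and replies once the promise settles.
const sendResponseSketch = res => promise =>
  promise
    .then(data => res.status(200).json({ status: 'success', data }))
    .catch(error => res.status(500).json({ status: 'failure', message: error.message }));

// Minimal fake of the response object, just enough for this sketch
const createFakeRes = () => {
  const res = { statusCode: null, body: null };
  res.status = code => { res.statusCode = code; return res; };
  res.json = body => { res.body = body; return res; };
  return res;
};

const okRes = createFakeRes();
const failRes = createFakeRes();

// One request that succeeds and one that fails
const done = Promise.all([
  sendResponseSketch(okRes)(Promise.resolve({ author: 'Glad Chinda' })),
  sendResponseSketch(failRes)(Promise.reject(new Error('Not found')))
]);

done.then(() => console.log(okRes.body, failRes.body));
```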

We have successfully built our API using a web scraping technique. Go ahead and test the API by running the npm start command in your terminal. Launch your favorite HTTP testing tool (e.g. Postman) and test the API endpoint. If you followed all the steps correctly, you should have a result that looks like the following demo:

Conclusion

In this tutorial, we have seen how we can employ web scraping techniques (especially DOM parsing) to extract data from a website. We used the Cheerio package to parse the content of a webpage using DOM methods, in much the same way as the popular jQuery library. Note, however, that Cheerio has its limitations. You can achieve more advanced parsing using tools such as JSDOM or a headless browser like PhantomJS.

Frequently Asked Questions about web scraping node

Is node good for web scraping?

Luckily for JavaScript developers, there are a variety of tools available in Node.js for scraping and parsing data directly from websites to use in your projects and applications. (Apr 29, 2020)

What is web scraping Nodejs?

Web scraping is the technique of extracting data from websites. … While extracting data from websites can be done manually, web scraping usually refers to an automated process. Web scraping is used by most bots and web crawlers for data extraction. (Dec 12, 2019)

How do you scrape a website in node?

Steps Required for Web Scraping:

Creating the package.json file.

Install & Call the required libraries.

Select the Website & Data needed to Scrape.

Set the URL & Check the Response Code.

Inspect & Find the Proper HTML tags.

Include the HTML tags in our Code.

Cross-check the Scraped Data.

(Oct 27, 2020)